wmwgijol28 / openai-php

OpenAI PHP is an enhanced PHP API client that allows you to interact with the OpenAI API. It is forked from https://github.com/openai-php/client.

Requires

- php: ^7.3

- php-http/discovery: ^1.18.1

- php-http/multipart-stream-builder: ^1.3.0

- psr/http-client: ^1.0.2

- psr/http-client-implementation: ^1.0.1

- psr/http-factory-implementation: *

- psr/http-message: ^1.0.1|^2.0.0

Requires (Dev)

- guzzlehttp/guzzle: ^7.6.1

- guzzlehttp/psr7: ^2.5.0

- mockery/mockery: ^1.5

- nunomaduro/collision: ^5.11.0

- pestphp/pest: ^1.23.0

- phpstan/phpstan: ^1.10.15

- rector/rector: ^0.14.8

- symfony/var-dumper: ^5.4.24

This package is auto-updated.

Last update: 2024-09-30 02:05:25 UTC

README


Get Started

Requires PHP 7.3+

First, install OpenAI via the Composer package manager:

```bash
composer require wmwgijol28/openai-php
```

Ensure that the php-http/discovery Composer plugin is allowed to run, or install a client manually if your project does not already have a PSR-18 client integrated:

```bash
composer require guzzlehttp/guzzle
```

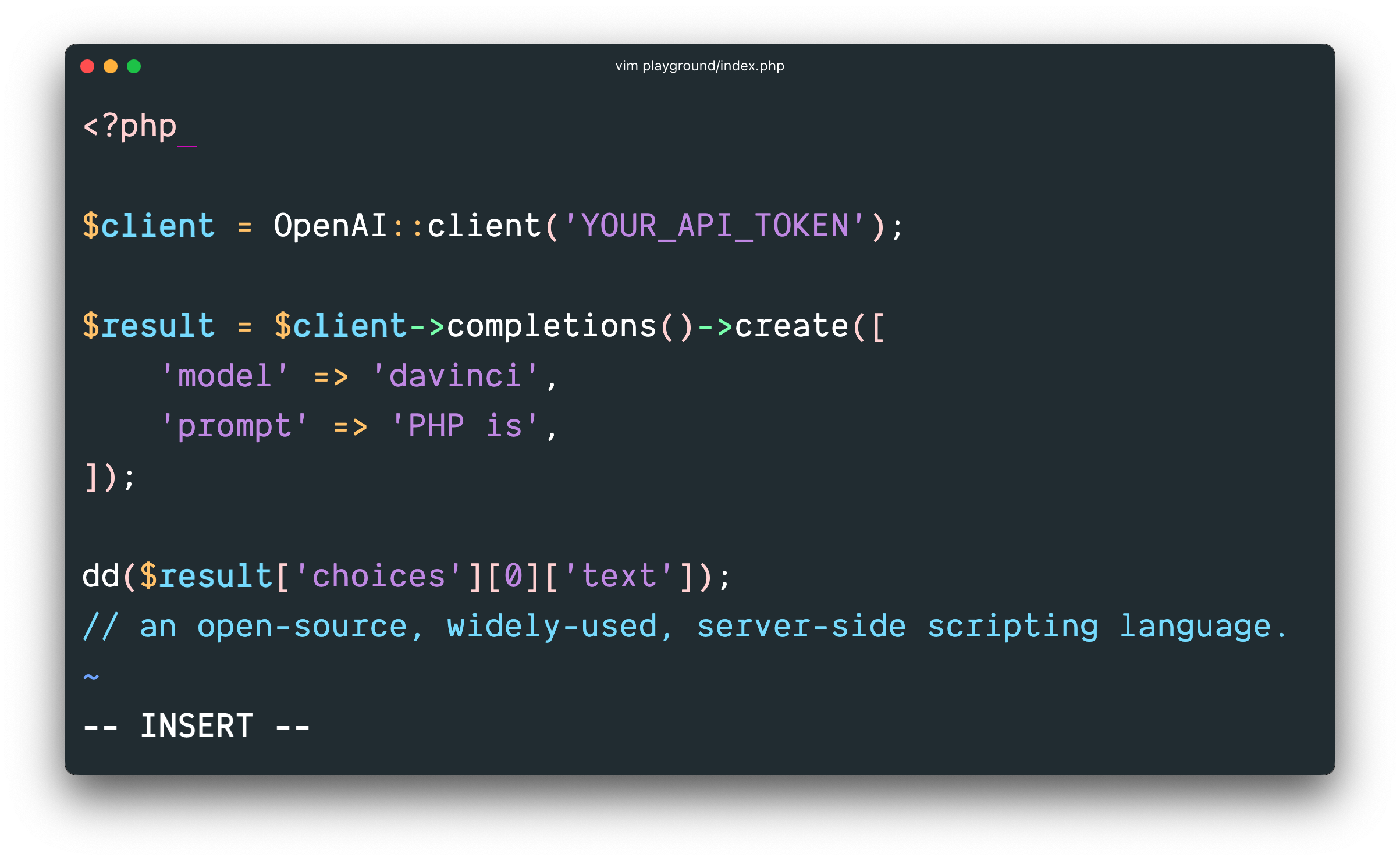

Then, interact with OpenAI's API:

```php
$yourApiKey = getenv('YOUR_API_KEY');
$client = OpenAI::client($yourApiKey);

$result = $client->completions()->create([
    'model' => 'text-davinci-003',
    'prompt' => 'PHP is',
]);

echo $result['choices'][0]['text']; // an open-source, widely-used, server-side scripting language.
```

If necessary, you can configure and create a separate client:

```php
use Psr\Http\Message\RequestInterface;
use Psr\Http\Message\ResponseInterface;

$yourApiKey = getenv('YOUR_API_KEY');
$httpClient = new \GuzzleHttp\Client([]);

$client = OpenAI::factory()
    ->withApiKey($yourApiKey)
    ->withOrganization('your-organization') // default: null
    ->withBaseUri('openai.example.com/v1') // default: api.openai.com/v1
    ->withHttpClient($httpClient) // default: HTTP client found using PSR-18 HTTP Client Discovery
    ->withHttpHeader('X-My-Header', 'foo')
    ->withQueryParam('my-param', 'bar')
    // Allows you to provide a custom stream handler for the HTTP client.
    // A regular closure is used because arrow functions (fn) require PHP 7.4.
    ->withStreamHandler(function (RequestInterface $request) use ($httpClient): ResponseInterface {
        return $httpClient->send($request, [
            'stream' => true,
        ]);
    })
    ->make();
```

Usage

Models Resource

list

Lists the currently available models, and provides basic information about each one, such as the owner and availability.

```php
$response = $client->models()->list();

$response->object; // 'list'

foreach ($response->data as $result) {
    $result->id; // 'text-davinci-003'
    $result->object; // 'model'
    // ...
}

$response->toArray(); // ['object' => 'list', 'data' => [...]]
```

retrieve

Retrieves a model instance, providing basic information about the model, such as the owner and permissions.

```php
$response = $client->models()->retrieve('text-davinci-003');

$response->id; // 'text-davinci-003'
$response->object; // 'model'
$response->created; // 1642018370
$response->ownedBy; // 'openai'
$response->root; // 'text-davinci-003'
$response->parent; // null

foreach ($response->permission as $result) {
    $result->id; // 'modelperm-7E53j9OtnMZggjqlwMxW4QG7'
    $result->object; // 'model_permission'
    $result->created; // 1664307523
    $result->allowCreateEngine; // false
    $result->allowSampling; // true
    $result->allowLogprobs; // true
    $result->allowSearchIndices; // false
    $result->allowView; // true
    $result->allowFineTuning; // false
    $result->organization; // '*'
    $result->group; // null
    $result->isBlocking; // false
}

$response->toArray(); // ['id' => 'text-davinci-003', ...]
```

delete

Deletes a fine-tuned model.

```php
$response = $client->models()->delete('curie:ft-acmeco-2021-03-03-21-44-20');

$response->id; // 'curie:ft-acmeco-2021-03-03-21-44-20'
$response->object; // 'model'
$response->deleted; // true

$response->toArray(); // ['id' => 'curie:ft-acmeco-2021-03-03-21-44-20', ...]
```

Completions Resource

create

Creates a completion for the provided prompt and parameters.

```php
$response = $client->completions()->create([
    'model' => 'text-davinci-003',
    'prompt' => 'Say this is a test',
    'max_tokens' => 6,
    'temperature' => 0,
]);

$response->id; // 'cmpl-uqkvlQyYK7bGYrRHQ0eXlWi7'
$response->object; // 'text_completion'
$response->created; // 1589478378
$response->model; // 'text-davinci-003'

foreach ($response->choices as $result) {
    $result->text; // '\n\nThis is a test'
    $result->index; // 0
    $result->logprobs; // null
    $result->finishReason; // 'length' or null
}

$response->usage->promptTokens; // 5
$response->usage->completionTokens; // 6
$response->usage->totalTokens; // 11

$response->toArray(); // ['id' => 'cmpl-uqkvlQyYK7bGYrRHQ0eXlWi7', ...]
```
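Because `totalTokens` is the sum of `promptTokens` and `completionTokens` (5 + 6 = 11 above), usage across several calls can be tallied, for example to track a budget. The following is a minimal sketch, assuming that `$response->toArray()` exposes usage under a `'usage'` key with the API's snake_case field names (`prompt_tokens`, `completion_tokens`, `total_tokens`); `sumUsage` is a hypothetical helper, not part of this package.

```php
<?php

/**
 * Sums token usage over several responses, each given in the array shape
 * assumed for `$response->toArray()` (mirroring the API's JSON).
 *
 * @param array<int, array> $responses
 * @return array<string, int>
 */
function sumUsage(array $responses): array
{
    $totals = ['prompt_tokens' => 0, 'completion_tokens' => 0, 'total_tokens' => 0];

    foreach ($responses as $response) {
        foreach (array_keys($totals) as $key) {
            // A missing counter (or a missing 'usage' key) counts as 0.
            $totals[$key] += $response['usage'][$key] ?? 0;
        }
    }

    return $totals;
}

// Usage values taken from the completion and chat examples in this README.
$totals = sumUsage([
    ['usage' => ['prompt_tokens' => 5, 'completion_tokens' => 6, 'total_tokens' => 11]],
    ['usage' => ['prompt_tokens' => 9, 'completion_tokens' => 12, 'total_tokens' => 21]],
]);

echo $totals['total_tokens'], PHP_EOL; // 32
```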

create streamed

Creates a streamed completion for the provided prompt and parameters.

```php
$stream = $client->completions()->createStreamed([
    'model' => 'text-davinci-003',
    'prompt' => 'Hi',
    'max_tokens' => 10,
]);

foreach ($stream as $response) {
    $response->choices[0]->text;
}
// 1. iteration => 'I'
// 2. iteration => ' am'
// 3. iteration => ' very'
// 4. iteration => ' excited'
// ...
```

Chat Resource

create

Creates a completion for the chat message.

```php
$response = $client->chat()->create([
    'model' => 'gpt-3.5-turbo',
    'messages' => [
        ['role' => 'user', 'content' => 'Hello!'],
    ],
]);

$response->id; // 'chatcmpl-6pMyfj1HF4QXnfvjtfzvufZSQq6Eq'
$response->object; // 'chat.completion'
$response->created; // 1677701073
$response->model; // 'gpt-3.5-turbo-0301'

foreach ($response->choices as $result) {
    $result->index; // 0
    $result->message->role; // 'assistant'
    $result->message->content; // '\n\nHello there! How can I assist you today?'
    $result->finishReason; // 'stop'
}

$response->usage->promptTokens; // 9
$response->usage->completionTokens; // 12
$response->usage->totalTokens; // 21

$response->toArray(); // ['id' => 'chatcmpl-6pMyfj1HF4QXnfvjtfzvufZSQq6Eq', ...]
```

create streamed

Creates a streamed completion for the chat message.

```php
$stream = $client->chat()->createStreamed([
    'model' => 'gpt-4',
    'messages' => [
        ['role' => 'user', 'content' => 'Hello!'],
    ],
]);

foreach ($stream as $response) {
    $response->choices[0]->toArray();
}
// 1. iteration => ['index' => 0, 'delta' => ['role' => 'assistant'], 'finish_reason' => null]
// 2. iteration => ['index' => 0, 'delta' => ['content' => 'Hello'], 'finish_reason' => null]
// 3. iteration => ['index' => 0, 'delta' => ['content' => '!'], 'finish_reason' => null]
// ...
```
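Each streamed chunk carries only a fragment of the reply, so the full message has to be assembled on the client side. A minimal sketch, assuming each chunk has the array shape shown above for `$response->choices[0]->toArray()`; `collectChatContent` is a hypothetical helper, not part of this package.

```php
<?php

/**
 * Joins the `delta.content` fragments of streamed chat chunks into one string.
 * Chunks without content (such as the role-only first chunk) are skipped.
 *
 * @param iterable<array> $chunks
 */
function collectChatContent(iterable $chunks): string
{
    $content = '';

    foreach ($chunks as $chunk) {
        if (isset($chunk['delta']['content'])) {
            $content .= $chunk['delta']['content'];
        }
    }

    return $content;
}

// Example input mirroring the iterations listed above.
$chunks = [
    ['index' => 0, 'delta' => ['role' => 'assistant'], 'finish_reason' => null],
    ['index' => 0, 'delta' => ['content' => 'Hello'], 'finish_reason' => null],
    ['index' => 0, 'delta' => ['content' => '!'], 'finish_reason' => null],
];

echo collectChatContent($chunks), PHP_EOL; // Hello!
```

With a live stream, you would collect `$response->choices[0]->toArray()` inside the `foreach` loop above and pass the resulting arrays to the helper.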

Audio Resource

transcribe

Transcribes audio into the input language.

```php
$response = $client->audio()->transcribe([
    'model' => 'whisper-1',
    'file' => fopen('audio.mp3', 'r'),
    'response_format' => 'verbose_json',
]);

$response->task; // 'transcribe'
$response->language; // 'english'
$response->duration; // 2.95
$response->text; // 'Hello, how are you?'

foreach ($response->segments as $segment) {
    $segment->index; // 0
    $segment->seek; // 0
    $segment->start; // 0.0
    $segment->end; // 4.0
    $segment->text; // 'Hello, how are you?'
    $segment->tokens; // [50364, 2425, 11, 577, 366, 291, 30, 50564]
    $segment->temperature; // 0.0
    $segment->avgLogprob; // -0.45045216878255206
    $segment->compressionRatio; // 0.7037037037037037
    $segment->noSpeechProb; // 0.1076972484588623
    $segment->transient; // false
}

$response->toArray(); // ['task' => 'transcribe', ...]
```

translate

Translates audio into English.

```php
$response = $client->audio()->translate([
    'model' => 'whisper-1',
    'file' => fopen('german.mp3', 'r'),
    'response_format' => 'verbose_json',
]);

$response->task; // 'translate'
$response->language; // 'english'
$response->duration; // 2.95
$response->text; // 'Hello, how are you?'

foreach ($response->segments as $segment) {
    $segment->index; // 0
    $segment->seek; // 0
    $segment->start; // 0.0
    $segment->end; // 4.0
    $segment->text; // 'Hello, how are you?'
    $segment->tokens; // [50364, 2425, 11, 577, 366, 291, 30, 50564]
    $segment->temperature; // 0.0
    $segment->avgLogprob; // -0.45045216878255206
    $segment->compressionRatio; // 0.7037037037037037
    $segment->noSpeechProb; // 0.1076972484588623
    $segment->transient; // false
}

$response->toArray(); // ['task' => 'translate', ...]
```

Edits Resource

create

Creates a new edit for the provided input, instruction, and parameters.

```php
$response = $client->edits()->create([
    'model' => 'text-davinci-edit-001',
    'input' => 'What day of the wek is it?',
    'instruction' => 'Fix the spelling mistakes',
]);

$response->object; // 'edit'
$response->created; // 1589478378

foreach ($response->choices as $result) {
    $result->text; // 'What day of the week is it?'
    $result->index; // 0
}

$response->usage->promptTokens; // 25
$response->usage->completionTokens; // 32
$response->usage->totalTokens; // 57

$response->toArray(); // ['object' => 'edit', ...]
```

Embeddings Resource

create

Creates an embedding vector representing the input text.

```php
$response = $client->embeddings()->create([
    'model' => 'text-similarity-babbage-001',
    'input' => 'The food was delicious and the waiter...',
]);

$response->object; // 'list'

foreach ($response->embeddings as $embedding) {
    $embedding->object; // 'embedding'
    $embedding->embedding; // [0.018990106880664825, -0.0073809814639389515, ...]
    $embedding->index; // 0
}

$response->usage->promptTokens; // 8
$response->usage->totalTokens; // 8

$response->toArray(); // ['data' => [...], ...]
```
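Embedding vectors are commonly compared with cosine similarity, for example to rank texts by semantic closeness. A minimal sketch in plain PHP; `cosineSimilarity` is a hypothetical helper, not part of this package, and it assumes you pass two `$embedding->embedding` arrays produced by the same model, so that they have equal length.

```php
<?php

/**
 * Cosine similarity of two equal-length vectors: dot(a, b) / (|a| * |b|).
 * The result lies (up to floating-point error) in [-1, 1]; higher means more similar.
 *
 * @param float[] $a
 * @param float[] $b
 */
function cosineSimilarity(array $a, array $b): float
{
    $a = array_values($a);
    $b = array_values($b);
    $n = count($a);

    if ($n === 0 || $n !== count($b)) {
        throw new InvalidArgumentException('Vectors must be non-empty and of equal length.');
    }

    $dot = 0.0;
    $normA = 0.0;
    $normB = 0.0;

    for ($i = 0; $i < $n; $i++) {
        $dot += $a[$i] * $b[$i];
        $normA += $a[$i] ** 2;
        $normB += $b[$i] ** 2;
    }

    if ($normA === 0.0 || $normB === 0.0) {
        throw new InvalidArgumentException('Cosine similarity is undefined for zero vectors.');
    }

    return $dot / (sqrt($normA) * sqrt($normB));
}

// Parallel vectors are maximally similar; orthogonal ones score 0.
echo round(cosineSimilarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]), 6), PHP_EOL; // 1
echo cosineSimilarity([1.0, 0.0], [0.0, 1.0]), PHP_EOL; // 0
```

For example, if `input` is given an array of two texts, you could compare `$response->embeddings[0]->embedding` with `$response->embeddings[1]->embedding`.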

Files Resource

list

Returns a list of files that belong to the user's organization.

```php
$response = $client->files()->list();

$response->object; // 'list'

foreach ($response->data as $result) {
    $result->id; // 'file-XjGxS3KTG0uNmNOK362iJua3'
    $result->object; // 'file'
    // ...
}

$response->toArray(); // ['object' => 'list', 'data' => [...]]
```

delete

Deletes a file.

```php
$response = $client->files()->delete('file-XjGxS3KTG0uNmNOK362iJua3');

$response->id; // 'file-XjGxS3KTG0uNmNOK362iJua3'
$response->object; // 'file'
$response->deleted; // true

$response->toArray(); // ['id' => 'file-XjGxS3KTG0uNmNOK362iJua3', ...]
```

retrieve

Returns information about a specific file.

```php
$response = $client->files()->retrieve('file-XjGxS3KTG0uNmNOK362iJua3');

$response->id; // 'file-XjGxS3KTG0uNmNOK362iJua3'
$response->object; // 'file'
$response->bytes; // 140
$response->createdAt; // 1613779657
$response->filename; // 'mydata.jsonl'
$response->purpose; // 'fine-tune'
$response->status; // 'succeeded'
$response->status_details; // null

$response->toArray(); // ['id' => 'file-XjGxS3KTG0uNmNOK362iJua3', ...]
```

upload

Uploads a file containing documents to be used across various endpoints/features.

```php
$response = $client->files()->upload([
    'purpose' => 'fine-tune',
    'file' => fopen('my-file.jsonl', 'r'),
]);

$response->id; // 'file-XjGxS3KTG0uNmNOK362iJua3'
$response->object; // 'file'
$response->bytes; // 140
$response->createdAt; // 1613779657
$response->filename; // 'mydata.jsonl'
$response->purpose; // 'fine-tune'
$response->status; // 'succeeded'
$response->status_details; // null

$response->toArray(); // ['id' => 'file-XjGxS3KTG0uNmNOK362iJua3', ...]
```

download

Returns the contents of the specified file.

```php
$client->files()->download('file-XjGxS3KTG0uNmNOK362iJua3'); // '{"prompt": "<prompt text>", ...'
```
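The download example suggests that fine-tune files are JSON Lines, with one JSON object per line. Assuming `download()` returns the file contents as a string, as the comment above indicates, the records can be decoded line by line. `parseJsonLines` is a hypothetical helper, not part of this package.

```php
<?php

/**
 * Decodes JSON Lines content (one JSON object per line) into a list of arrays.
 * Blank lines are ignored; a line that is not a JSON object raises an exception.
 *
 * @return array<int, array>
 */
function parseJsonLines(string $contents): array
{
    $records = [];

    foreach (preg_split('/\r\n|\n|\r/', $contents) as $index => $line) {
        if (trim($line) === '') {
            continue; // tolerate trailing newlines and empty lines
        }

        $record = json_decode($line, true);

        if (json_last_error() !== JSON_ERROR_NONE || !is_array($record)) {
            throw new UnexpectedValueException(sprintf('Invalid JSON on line %d', $index + 1));
        }

        $records[] = $record;
    }

    return $records;
}

// Stand-in contents; with the client this string would come from download().
$contents = "{\"prompt\": \"PHP is\", \"completion\": \" great\"}\n"
    . "{\"prompt\": \"Hi\", \"completion\": \" there\"}\n";

$records = parseJsonLines($contents);
echo count($records), PHP_EOL; // 2
```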

FineTunes Resource

create

Creates a job that fine-tunes a specified model from a given dataset.

```php
$response = $client->fineTunes()->create([
    'training_file' => 'file-ajSREls59WBbvgSzJSVWxMCB',
    'validation_file' => 'file-XjSREls59WBbvgSzJSVWxMCa',
    'model' => 'curie',
    'n_epochs' => 4,
    'batch_size' => null,
    'learning_rate_multiplier' => null,
    'prompt_loss_weight' => 0.01,
    'compute_classification_metrics' => false,
    'classification_n_classes' => null,
    'classification_positive_class' => null,
    'classification_betas' => [],
    'suffix' => null,
]);

$response->id; // 'ft-AF1WoRqd3aJAHsqc9NY7iL8F'
$response->object; // 'fine-tune'
// ...

$response->toArray(); // ['id' => 'ft-AF1WoRqd3aJAHsqc9NY7iL8F', ...]
```

list

Lists your organization's fine-tuning jobs.

```php
$response = $client->fineTunes()->list();

$response->object; // 'list'

foreach ($response->data as $result) {
    $result->id; // 'ft-AF1WoRqd3aJAHsqc9NY7iL8F'
    $result->object; // 'fine-tune'
    // ...
}

$response->toArray(); // ['object' => 'list', 'data' => [...]]
```

retrieve

Gets information about a fine-tune job.

```php
$response = $client->fineTunes()->retrieve('ft-AF1WoRqd3aJAHsqc9NY7iL8F');

$response->id; // 'ft-AF1WoRqd3aJAHsqc9NY7iL8F'
$response->object; // 'fine-tune'
$response->model; // 'curie'
$response->createdAt; // 1614807352
$response->fineTunedModel; // 'curie:ft-acmeco-2021-03-03-21-44-20'
$response->organizationId; // 'org-jwe45798ASN82s'
$response->resultFiles; // [...] (iterated below)
$response->status; // 'succeeded'
$response->validationFiles; // [...] (iterated below)
$response->trainingFiles; // [...] (iterated below)
$response->updatedAt; // 1614807865

foreach ($response->events as $result) {
    $result->object; // 'fine-tune-event'
    $result->createdAt; // 1614807352
    $result->level; // 'info'
    $result->message; // 'Job enqueued. Waiting for jobs ahead to complete. Queue number: 0.'
}

$response->hyperparams->batchSize; // 4
$response->hyperparams->learningRateMultiplier; // 0.1
$response->hyperparams->nEpochs; // 4
$response->hyperparams->promptLossWeight; // 0.1

foreach ($response->resultFiles as $result) {
    $result->id; // 'file-XjGxS3KTG0uNmNOK362iJua3'
    $result->object; // 'file'
    $result->bytes; // 140
    $result->createdAt; // 1613779657
    $result->filename; // 'mydata.jsonl'
    $result->purpose; // 'fine-tune'
    $result->status; // 'succeeded'
    $result->status_details; // null
}

foreach ($response->validationFiles as $result) {
    $result->id; // 'file-XjGxS3KTG0uNmNOK362iJua3'
    // ...
}

foreach ($response->trainingFiles as $result) {
    $result->id; // 'file-XjGxS3KTG0uNmNOK362iJua3'
    // ...
}

$response->toArray(); // ['id' => 'ft-AF1WoRqd3aJAHsqc9NY7iL8F', ...]
```

cancel

Immediately cancels a fine-tune job.

```php
$response = $client->fineTunes()->cancel('ft-AF1WoRqd3aJAHsqc9NY7iL8F');

$response->id; // 'ft-AF1WoRqd3aJAHsqc9NY7iL8F'
$response->object; // 'fine-tune'
// ...
$response->status; // 'cancelled'
// ...

$response->toArray(); // ['id' => 'ft-AF1WoRqd3aJAHsqc9NY7iL8F', ...]
```

list events

Gets fine-grained status updates for a fine-tune job.

```php
$response = $client->fineTunes()->listEvents('ft-AF1WoRqd3aJAHsqc9NY7iL8F');

$response->object; // 'list'

foreach ($response->data as $result) {
    $result->object; // 'fine-tune-event'
    $result->createdAt; // 1614807352
    // ...
}

$response->toArray(); // ['object' => 'list', 'data' => [...]]
```

list events streamed

Gets streamed fine-grained status updates for a fine-tune job.

```php
$stream = $client->fineTunes()->listEventsStreamed('ft-y3OpNlc8B5qBVGCCVsLZsDST');

foreach ($stream as $response) {
    $response->message;
}
// 1. iteration => 'Created fine-tune: ft-y3OpNlc8B5qBVGCCVsLZsDST'
// 2. iteration => 'Fine-tune costs $0.00'
// ...
// xx. iteration => 'Uploaded result file: file-ajLKUCMsFPrT633zqwr0eI4l'
// xx. iteration => 'Fine-tune succeeded'
```

Moderations Resource

create

Classifies whether text violates OpenAI's content policy.

```php
$response = $client->moderations()->create([
    'model' => 'text-moderation-latest',
    'input' => 'I want to k*** them.',
]);

$response->id; // 'modr-5xOyuS'
$response->model; // 'text-moderation-003'

foreach ($response->results as $result) {
    $result->flagged; // true

    foreach ($result->categories as $category) {
        $category->category->value; // 'violence'
        $category->violated; // true
        $category->score; // 0.97431367635727
    }
}

$response->toArray(); // ['id' => 'modr-5xOyuS', ...]
```

Images Resource

create

Creates an image given a prompt.

```php
$response = $client->images()->create([
    'prompt' => 'A cute baby sea otter',
    'n' => 1,
    'size' => '256x256',
    'response_format' => 'url',
]);

$response->created; // 1589478378

foreach ($response->data as $data) {
    $data->url; // 'https://oaidalleapiprodscus.blob.core.windows.net/private/...'
    $data->b64_json; // null
}

$response->toArray(); // ['created' => 1589478378, 'data' => [['url' => 'https://oaidalleapiprodscus...', ...]]]
```
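When `'response_format' => 'b64_json'` is requested instead of `'url'`, the image is expected in `$data->b64_json` as base64-encoded data rather than as a URL. A minimal sketch for writing that data to disk, assuming the field holds standard base64; `saveBase64Image` is a hypothetical helper, not part of this package, and the demo uses a tiny stand-in payload rather than real image data.

```php
<?php

/**
 * Decodes a base64 string (such as a `b64_json` value) and writes the bytes
 * to $path. Returns the number of bytes written.
 */
function saveBase64Image(string $b64Json, string $path): int
{
    // Strict mode rejects characters outside the base64 alphabet.
    $binary = base64_decode($b64Json, true);

    if ($binary === false) {
        throw new InvalidArgumentException('Input is not valid base64.');
    }

    $written = file_put_contents($path, $binary);

    if ($written === false) {
        throw new RuntimeException("Could not write to {$path}");
    }

    return $written;
}

// Demo with a stand-in payload; a real call would pass $data->b64_json.
$path = sys_get_temp_dir() . '/openai-image-demo.bin';
echo saveBase64Image(base64_encode('fake image bytes'), $path), PHP_EOL; // 16
```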

edit

Creates an edited or extended image given an original image and a prompt.

```php
$response = $client->images()->edit([
    'image' => fopen('image_edit_original.png', 'r'),
    'mask' => fopen('image_edit_mask.png', 'r'),
    'prompt' => 'A sunlit indoor lounge area with a pool containing a flamingo',
    'n' => 1,
    'size' => '256x256',
    'response_format' => 'url',
]);

$response->created; // 1589478378

foreach ($response->data as $data) {
    $data->url; // 'https://oaidalleapiprodscus.blob.core.windows.net/private/...'
    $data->b64_json; // null
}

$response->toArray(); // ['created' => 1589478378, 'data' => [['url' => 'https://oaidalleapiprodscus...', ...]]]
```

variation

Creates a variation of a given image.

```php
$response = $client->images()->variation([
    'image' => fopen('image_edit_original.png', 'r'),
    'n' => 1,
    'size' => '256x256',
    'response_format' => 'url',
]);

$response->created; // 1589478378

foreach ($response->data as $data) {
    $data->url; // 'https://oaidalleapiprodscus.blob.core.windows.net/private/...'
    $data->b64_json; // null
}

$response->toArray(); // ['created' => 1589478378, 'data' => [['url' => 'https://oaidalleapiprodscus...', ...]]]
```

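Testing

The package provides a fake implementation of the OpenAI\Client class that allows you to fake the API responses.

To test your code, make sure you swap the OpenAI\Client class with the OpenAI\Testing\ClientFake class in your test case.

The fake responses are returned in the order in which they were provided when creating the fake client.

All responses have a fake() method that lets you easily create a response object by providing only the parameters relevant to your test case.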

```php
use OpenAI\Testing\ClientFake;
use OpenAI\Responses\Completions\CreateResponse;

$client = new ClientFake([
    CreateResponse::fake([
        'choices' => [
            [
                'text' => 'awesome!',
            ],
        ],
    ]),
]);

$completion = $client->completions()->create([
    'model' => 'text-davinci-003',
    'prompt' => 'PHP is ',
]);

expect($completion['choices'][0]['text'])->toBe('awesome!');
```

For streamed responses, you can optionally provide a resource that holds the fake response data.

```php
use OpenAI\Testing\ClientFake;
use OpenAI\Responses\Chat\CreateStreamedResponse;

$client = new ClientFake([
    CreateStreamedResponse::fake(fopen('file.txt', 'r')),
]);

$completion = $client->chat()->createStreamed([
    'model' => 'gpt-3.5-turbo',
    'messages' => [
        ['role' => 'user', 'content' => 'Hello!'],
    ],
]);

expect($completion->getIterator()->current())
    ->id->toBe('chatcmpl-6yo21W6LVo8Tw2yBf7aGf2g17IeIl');
```

After the requests have been sent, there are various methods to ensure that the expected requests were sent:

```php
use OpenAI\Resources\Completions;

// assert completion create request was sent
$client->assertSent(Completions::class, function (string $method, array $parameters): bool {
    return $method === 'create' &&
        $parameters['model'] === 'text-davinci-003' &&
        $parameters['prompt'] === 'PHP is ';
});

// or
$client->completions()->assertSent(function (string $method, array $parameters): bool {
    // ...
});

// assert 2 completion create requests were sent
$client->assertSent(Completions::class, 2);

// assert no completion create requests were sent
$client->assertNotSent(Completions::class);

// or
$client->completions()->assertNotSent();

// assert no requests were sent
$client->assertNothingSent();
```

To write tests that expect the API request to fail, you can provide a Throwable object as the response.

```php
$client = new ClientFake([
    new \OpenAI\Exceptions\ErrorException([
        'message' => 'The model `gpt-1` does not exist',
        'type' => 'invalid_request_error',
        'code' => null,
    ]),
]);

// the `ErrorException` will be thrown
$completion = $client->completions()->create([
    'model' => 'text-davinci-003',
    'prompt' => 'PHP is ',
]);
```
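The two behaviours described in this section, fake responses returned in the order they were provided and `Throwable` objects thrown instead of returned, can be pictured as a simple queue. The class below is a hypothetical illustration of that idea only; `QueuedFake` and its methods are invented for this sketch and are not the implementation of `OpenAI\Testing\ClientFake`.

```php
<?php

/**
 * Hypothetical first-in, first-out fake: each call records the request and
 * hands out the next queued response, throwing it if it is a Throwable.
 */
final class QueuedFake
{
    /** @var array<int, mixed> */
    private $responses;

    /** @var array<int, array> */
    private $sent = [];

    public function __construct(array $responses)
    {
        $this->responses = array_values($responses);
    }

    /** Records the call and returns (or throws) the next queued response. */
    public function record(string $method, array $parameters)
    {
        $this->sent[] = ['method' => $method, 'parameters' => $parameters];

        if ($this->responses === []) {
            throw new RuntimeException('No fake responses left.');
        }

        $response = array_shift($this->responses);

        // A queued Throwable simulates a failing API call.
        if ($response instanceof Throwable) {
            throw $response;
        }

        return $response;
    }

    public function sentCount(): int
    {
        return count($this->sent);
    }
}

$fake = new QueuedFake(['first', 'second', new RuntimeException('API error')]);
echo $fake->record('create', ['prompt' => 'PHP is ']), PHP_EOL; // first
echo $fake->record('create', ['prompt' => 'PHP is ']), PHP_EOL; // second
```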

Services

Azure

To use the Azure OpenAI Service, you must construct the client manually using the factory.

```php
$client = OpenAI::factory()
    ->withBaseUri('{your-resource-name}.openai.azure.com/openai/deployments/{deployment-id}')
    ->withHttpHeader('api-key', '{your-api-key}')
    ->withQueryParam('api-version', '{version}')
    ->make();
```

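To use Azure, you must deploy a model, identified by {deployment-id}, which is already integrated into the API calls. As a result, you do not have to provide the model in your calls, because it is already included in the BaseUri.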

Therefore, a basic sample completion call would be:

```php
$result = $client->completions()->create([
    'prompt' => 'PHP is',
]);
```
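The `{your-resource-name}` and `{deployment-id}` placeholders in the Azure base URI can be filled in programmatically. A minimal sketch, assuming the URI format shown above; `azureBaseUri` is a hypothetical helper, not part of this package, and `my-resource` / `my-gpt-deployment` are placeholder names.

```php
<?php

/**
 * Builds "{resource}.openai.azure.com/openai/deployments/{deployment}",
 * the base URI format shown above. Both parts are URL-encoded defensively.
 */
function azureBaseUri(string $resourceName, string $deploymentId): string
{
    if ($resourceName === '' || $deploymentId === '') {
        throw new InvalidArgumentException('Resource name and deployment id are required.');
    }

    return sprintf(
        '%s.openai.azure.com/openai/deployments/%s',
        rawurlencode($resourceName),
        rawurlencode($deploymentId)
    );
}

echo azureBaseUri('my-resource', 'my-gpt-deployment'), PHP_EOL;
// my-resource.openai.azure.com/openai/deployments/my-gpt-deployment
```

The resulting string could then be passed to `withBaseUri()` when building the client with `OpenAI::factory()`.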

OpenAI PHP is open-sourced software licensed under the MIT license.