jbzoo csv-blueprint

基于自定义规则的CSV文件验证和生成CLI工具。它确保您的数据符合指定标准,简化数据管理和完整性检查。

Requires

- php: ^8.2

- ext-mbstring: *

- fidry/cpu-core-counter: ^1.1.0

- giggsey/libphonenumber-for-php-lite: ^8.13.35

- jbzoo/ci-report-converter: ^7.2.1

- jbzoo/cli: ^7.2.2

- jbzoo/data: ^7.1.1

- jbzoo/utils: ^7.2.1

- league/csv: ^9.15.0

- markrogoyski/math-php: ^2.10.0

- respect/validation: ^2.3.7

- symfony/filesystem: ^7.0.6

- symfony/finder: ^7.0.0

- symfony/yaml: ^7.0.3

Requires (Dev)

- fakerphp/faker: ^1.23.1

- jbzoo/codestyle: ^7.1.2

- jbzoo/toolbox-dev: ^7.1.0

- phpstan/phpstan: 1.11.1

- roave/security-advisories: dev-latest

- dev-master / 7.x-dev

- 1.0.3

- 1.0.2

- 1.0.1

- 1.0.0

- 0.50

- 0.49

- 0.48

- 0.47

- 0.46

- 0.45

- 0.44

- 0.43

- 0.42

- 0.41

- 0.40

- 0.39

- 0.38

- 0.37

- 0.36

- 0.35

- 0.34

- 0.33

- 0.32

- 0.31

- 0.30

- 0.29

- 0.28

- 0.27

- 0.26

- 0.25

- 0.24

- 0.23

- 0.22

- 0.21

- 0.20

- 0.19

- 0.18

- 0.17

- 0.16

- 0.15

- 0.14

- 0.12

- 0.11

- 0.10

- 0.9

- 0.8

- 0.7

- 0.6

- 0.5.1

- 0.5

- 0.4

- 0.3

- 0.2

- 0.1

- dev-fix-failed-test

- dev-parallel-optional

- dev-ds-vector

This package is auto-updated.

Last update: 2024-09-06 07:58:26 UTC

README

基于可自定义Yaml架构的严格且自动化的逐行CSV验证工具。

几秒钟内,确保每字节文件中的每个字符都符合您的预期。

我相信这是世界上最简单、最灵活、功能最强大的CSV验证器。☺️

功能

- 只需创建一个简单且友好的Yaml格式的CSV架构,工具就会逐行验证您的文件。您将获得一份非常详细的报告,其中包括行、列和规则的准确性。

- 开箱即用,您可以使用超过330个验证规则,这些规则可以组合起来控制验证的严重性。

- 您可以验证每个值(例如,每行的日期具有严格的格式),或者整个列(例如,所有值的平均值在限制内)。您可以选择规则的严重性。

- 由于其封装在Docker中,甚至可以作为您GitHub Actions的一部分使用。

- 在您的管道/ETL/CI中创建CSV文件,并确保其符合最严格的预期。

- 使用预设准备具有复杂规则的库。这将帮助您同时处理数百个不同的文件。

- 即时创建架构,基于现有的CSV文件,并分析CSV中的数据——找出文件中存储的内容,并获得总结报告。

点击查看典型的跨团队工作流程

-

数据准备:团队A生成符合预定义格式的CSV数据,并将文件放置在团队B可访问的共享位置(例如,共享存储库或云存储)。

-

通知:团队A通过公司沟通渠道(电子邮件、聊天、任务管理系统)通知团队B数据已准备就绪。

-

验证:团队B使用存储在存储库中的预定义验证规则检查CSV文件的准确性,并在导入之前进行完整性检查。这包括验证日期格式、数值和必需列的存在。

-

数据导入:在验证成功后,团队B将数据从CSV文件导入其系统以进行进一步处理。

-

错误处理:如果验证发现错误,则流程停止,团队B向团队A提供反馈以纠正数据。

为什么验证是必要的

- 减少错误:在导入前验证数据可以最大限度地减少错误的可能性,从而提高数据质量。

- 效率:防止在导入后手动纠正错误所造成的时间损失。

- 数据一致性:确保数据符合团队B的预期和要求,便于准确处理和分析。

- 自动化:将验证规则存储在存储库中简化了检查自动化并简化了更新验证标准的过程。

实时演示

作为一个演示工具如何工作的现场演示,您可以在这里探索超级最小化仓库。对于更复杂的示例和各种报告方法,请查看具有不同报告类型的演示管道。

另请参阅

- 作为现场演示的PR - 注意PR中的Diff自动注释。

- .github/workflows/demo.yml

- demo_invalid.yml

- demo_valid.yml

- demo.csv

目录

使用方法

Docker容器

确保您的机器上已安装Docker。

# Pull the Docker image docker pull jbzoo/csv-blueprint:latest # Run the tool inside Docker docker run --rm \ --workdir=/parent-host \ -v $(pwd):/parent-host \ jbzoo/csv-blueprint:latest \ validate-csv \ # See available commands and options below. --csv=./tests/fixtures/demo.csv \ # Your CSV(s). --schema=./tests/schemas/demo_invalid.yml \ # Your schema(s). --ansi # OR build it from source. git clone git@github.com:jbzoo/csv-blueprint.git csv-blueprint cd csv-blueprint make docker-build # local tag is "jbzoo/csv-blueprint:local"

GitHub动作

您可以在工作流程演示中找到启动示例。

- uses: jbzoo/csv-blueprint@master # See the specific version on releases page. `@master` is latest. with: # Specify the path(s) to the CSV files you want to validate. # This can include a direct path to a file or a directory to search with a maximum depth of 10 levels. # Examples: p/file.csv; p/*.csv; p/**/*.csv; p/**/name-*.csv; **/*.csv csv: './tests/**/*.csv' # Specify the path(s) to the schema file(s), supporting YAML, JSON, or PHP formats. # Similar to CSV paths, you can direct to specific files or search directories with glob patterns. # Examples: p/file.yml; p/*.yml; p/**/*.yml; p/**/name-*.yml; **/*.yml schema: './tests/**/*.yml' # Report format. Available options: text, table, github, gitlab, teamcity, junit. # Default value: 'table' report: 'table' # Apply all schemas (also without `filename_pattern`) to all CSV files found as global rules. # Available options: # auto: If no glob pattern (*) is used for --schema, the schema is applied to all found CSV files. # yes: Apply all schemas to all CSV files, Schemas without `filename_pattern` are applied as a global rule. # no: Apply only schemas with not empty `filename_pattern` and match the CSV files. # Default value: 'auto' apply-all: 'auto' # Quick mode. It will not validate all rows. It will stop after the first error. # Default value: 'no' quick: 'no' # Skip schema validation. If you are sure that the schema is correct, you can skip this check. # Default value: 'no' skip-schema: 'no' # Extra options for the CSV Blueprint. Only for debbuging and profiling. # Available options: # Add flag `--parallel` if you want to validate CSV files in parallel. # Add flag `--dump-schema` if you want to see the final schema after all includes and inheritance. # Add flag `--debug` if you want to see more really deep details. # Add flag `--profile` if you want to see profiling info. Add details with `-vvv`. # Verbosity level: Available options: `-v`, `-vv`, `-vvv` # ANSI output. You can disable ANSI colors if you want with `--no-ansi`. # Default value: 'options: --ansi' # You can skip it. extra: 'options: --ansi'

Phar二进制文件

点击查看使用PHAR文件的方法

确保您的机器上已安装PHP。

# Just download the latest version wget https://github.com/jbzoo/csv-blueprint/releases/latest/download/csv-blueprint.phar chmod +x ./csv-blueprint.phar ./csv-blueprint.phar validate-csv \ --csv=./tests/fixtures/demo.csv \ --schema=./tests/schemas/demo_invalid.yml # OR create project via Composer (--no-dev is optional) composer create-project --no-dev jbzoo/csv-blueprint cd ./csv-blueprint ./csv-blueprint validate-csv \ --csv=./tests/fixtures/demo.csv \ --schema=./tests/schemas/demo_invalid.yml # OR build from source git clone git@github.com:jbzoo/csv-blueprint.git csv-blueprint cd csv-blueprint make build ./csv-blueprint validate-csv \ --csv=./tests/fixtures/demo.csv \ --schema=./tests/schemas/demo_invalid.yml

模式定义

使用YAML定义您的CSV验证模式,以实现清晰和结构化的配置。也支持其他格式:JSON和PHP,以满足各种偏好和工作流需求。

提供的示例说明了具有标题行的CSV文件的方案。它强制要求id列不能为空,并且只能包含整数值。此外,name列必须至少有3个字符的长度,以确保基本数据完整性和实用性。

YAML示例方案

name: Simple CSV Schema filename_pattern: /my-favorite-csv-\d+\.csv$/i csv: delimiter: ';' columns: - name: id rules: not_empty: true is_int: true aggregate_rules: is_unique: true sorted: [ asc, numeric ] - name: name rules: length_min: 3 aggregate_rules: count: 10

完整方案描述

在示例YAML文件中提供了所有功能的详细描述。该文档通过自动化测试进行验证,确保其保持最新。

注意

- 有意省略了列的传统类型(例如,

type: integer),以规则为主。这些规则可以自由排序和组合,为CSV文件验证提供广泛的灵活性。 - 除非另有说明,所有选项都是可选的。您可以根据需要包含或省略它们。

- 指定错误的规则名称、使用不存在的值(未列在下文)或为任何选项分配不兼容的变量类型会导致方案验证错误。您可以选择使用

--skip-schema标志来绕过这些错误,允许您在方案中使用自定义键。 - 除了

not_empty之外,所有规则都忽略空字符串。它不忽略空字符串(长度为0)。要强制非空值,请应用not_empty: true。请注意,单个空格算作一个字符,使得字符串长度为1。为防止此类情况,请包含is_trimmed: true。 - 规则独立操作;它们彼此之间没有知识或影响。

- 当规则的值是

is_some_rule: true时,它仅作为激活切换。其他值表示规则参数。 - 规则执行的顺序遵循方案中的顺序,这会影响报告中错误消息的顺序。

- 除非明确说明,否则大多数规则是区分大小写的。

- 作为后备,提供了

regex规则。然而,建议使用清晰的规则组合以提高验证错误的可读性。

以下是规则的详尽列表,每个规则都附有简要说明和示例,以便于理解。本节也通过自动化测试进行验证,确保信息的一致性。

点击查看每条规则的详细信息

# It's a complete example of the CSV schema file in YAML format. # See copy of the file without comments here ./schema-examples/full_clean.yml # Just meta name: CSV Blueprint Schema Example # Name of a CSV file. Not used in the validation process. description: |- # Any description of the CSV file. Not used in the validation process. This YAML file provides a detailed description and validation rules for CSV files to be processed by CSV Blueprint tool. It includes specifications for file name patterns, CSV formatting options, and extensive validation criteria for individual columns and their values, supporting a wide range of data validation rules from basic type checks to complex regex validations. This example serves as a comprehensive guide for creating robust CSV file validations. # Include another schema and define an alias for it. presets: my-preset: ./preset_users.yml # Define preset alias "my-preset". See README.md for details. # Regular expression to match the file name. If not set, then no pattern check. # This allows you to pre-validate the file name before processing its contents. # Feel free to check parent directories as well. # See: https://php.ac.cn/manual/en/reference.pcre.pattern.syntax.php filename_pattern: /\.csv$/i # preset: my-preset # See README.md for details. # Here are default values to parse CSV file. # You can skip this section if you don't need to override the default values. csv: preset: my-preset # See README.md for details. header: true # If the first row is a header. If true, name of each column is required. delimiter: , # Delimiter character in CSV file. quote_char: \ # Quote character in CSV file. enclosure: '"' # Enclosure for each field in CSV file. encoding: utf-8 # (Experimental) Only utf-8, utf-16, utf-32. bom: false # (Experimental) If the file has a BOM (Byte Order Mark) at the beginning. # Structural rules for the CSV file. These rules are applied to the entire CSV file. # They are not(!) related to the data in the columns. # You can skip this section if you don't need to override the default values. structural_rules: # Here are default values. preset: my-preset # See README.md for details. strict_column_order: true # Ensure columns in CSV follow the same order as defined in this YML schema. It works only if "csv.header" is true. allow_extra_columns: false # Allow CSV files to have more columns than specified in this YML schema. # Add any extra data you want. It will be ignored by the tool but available for your own code. # You can use any format and store anything. Examples: # extra: 'some text' # extra: [some, options, here] # extra: 42 extra: - key: "value" # Description of each column in CSV. # It is recommended to present each column in the same order as presented in the CSV file. # This will not affect the validator, but will make it easier for you to navigate. # For convenience, use the first line as a header (if possible). columns: - preset: my-preset/login # Add preset rules for the column. See README.md for details. name: Column Name (header) # Any custom name of the column in the CSV file (first row). Required if "csv.header" is true. description: Lorem ipsum # Description of the column. Not used in the validation process. example: Some example # Example of the column value. Schema will also check this value on its own. # If the column is required. If true, the column must be present in the CSV file. If false, the column can be missing in the CSV file. # So, if you want to make the column optional, set this value to false, and it will validate the column only if it is present. # By default, the column is required. It works only if "csv.header" is true and "structural_rules.allow_extra_columns" is false. required: true # Add any extra data you want. It will be ignored by the tool but available for your own code. # You can use any format and store anything. Examples: # extra: 'some text' # extra: [some, options, here] # extra: 42 extra: - key: "value" #################################################################################################################### # Data validation for each(!) value in the column. Please, see notes in README.md # Every rule is optional. rules: preset: my-preset/login # Add preset rules for the column. See README.md for details. # General rules not_empty: true # Value is not an empty string. Actually checks if the string length is not 0. exact_value: Some string # Exact value for string in the column. allow_values: [ y, n, "" ] # Strict set of values that are allowed. not_allow_values: [ invalid ] # Strict set of values that are NOT allowed. # Any valid regex pattern. See: https://php.ac.cn/manual/en/reference.pcre.pattern.syntax.php # Of course it's a super powerful tool to verify any sort of string data. # Please, be careful. Regex is a powerful tool, but it can be very dangerous if used incorrectly. # Remember that if you want to solve a problem with regex, you now have two problems. # But have it your way, then happy debugging! https://regex101.com regex: /^[\d]{2}$/ # Checks length of a string including spaces (multibyte safe). length_min: 1 # x >= 1 length_greater: 2 # x > 2 length_not: 0 # x != 0 length: 7 # x == 7 length_less: 8 # x < 8 length_max: 9 # x <= 9 # Basic string checks is_trimmed: true # Only trimmed strings. Example: "Hello World" (not " Hello World "). is_lowercase: true # String is only lower-case. Example: "hello world". is_uppercase: true # String is only upper-case. Example: "HELLO WORLD". is_capitalize: true # String is only capitalized. Example: "Hello World". is_sentence: true # Sentence with at least one space. Example: "Hello world!". # Count number of words used in a string # Note that multibyte locales are not supported. # Example: "Hello World, 123" - 2 words only (123 is not a word). word_count_min: 1 # x >= 1 word_count_greater: 2 # x > 2 word_count_not: 0 # x != 0 word_count: 7 # x == 7 word_count_less: 8 # x < 8 word_count_max: 9 # x <= 9 # Contains rules contains: World # Example: "Hello World!". The string must contain "World" in any place. contains_none: [ a, b ] # All the strings must NOT be part of a CSV value. contains_one: [ a, b ] # Only one of the strings must be part of the CSV value. contains_any: [ a, b ] # At least one of the string must be part of the CSV value. contains_all: [ a, b ] # All the strings must be part of a CSV value. starts_with: 'prefix ' # Example: "prefix Hello World". ends_with: ' suffix' # Example: "Hello World suffix". # Numeric is_int: true # Check format only. Can be negative and positive. Without any separators. is_float: true # Check format only. Can be negative and positive. Dot as decimal separator. # Under the hood it converts and compares as float values. # Comparison accuracy is 10 digits after a dot. # Scientific number format is also supported. Example: "1.2e3" num_min: 1.0 # x >= 1.0 num_greater: 2.0 # x > 2.0 num_not: 5.0 # x != 5.0 num: 7.0 # x == 7.0 num_less: 8.0 # x < 8.0 num_max: 9.0 # x <= 9.0 # Number of digits after the decimal point (with zeros) precision_min: 1 # x >= 1 precision_greater: 2 # x > 2 precision_not: 0 # x != 0 precision: 7 # x == 7 precision_less: 8 # x < 8 precision_max: 9 # x <= 9 # Date & time is_date: true # Accepts arbitrary date format. Is shows error if failed to convert to timestamp. is_timezone: true # Allow only timezone identifiers. Case-insensitive. Example: "Europe/London", "utc". is_timezone_offset: true # Allow only timezone offsets. Example: "+03:00". is_time: true # Check if the cell value is a valid time in the format "HH:MM:SS AM/PM" / "HH:MM:SS" / "HH:MM". Case-insensitive. is_leap_year: true # Check if the cell value is a leap year. Example: "2008", "2008-02-29 23:59:59 UTC". # Dates. Under the hood, the strings are converted to timestamp and compared. # This gives you the ability to use relative dates and any formatting you want. # By default, it works in UTC. But you can specify your own timezone as part of the date string. # Format: https://php.ac.cn/manual/en/datetime.format.php # Parsing: https://php.ac.cn/manual/en/function.strtotime.php # Timezones: https://php.ac.cn/manual/en/timezones.php date_min: -100 years # Example of relative past date date_greater: -99 days # Example of relative formats date_not: 2006-01-02 15:04:05 -0700 Europe/Rome date: 01 Jan 2000 # You can use any string that can be parsed by the strtotime function date_less: now # Example of current date and time date_max: +1 day # Example of relative future date date_format: Y-m-d # Check strict format of the date. # Date Intervals. Under the hood, the strings are converted to seconds and compared. # See: https://php.ac.cn/manual/en/class.dateinterval.php # See: https://php.ac.cn/manual/en/dateinterval.createfromdatestring.php date_interval_min: PT0S # 0 seconds date_interval_greater: 1day 1sec # 1 day and 1 second date_interval_not: 100 days # Except for the 100 days date_interval: P2W # Exactly 2 weeks date_interval_less: PT23H59M59S # 23 hours, 59 minutes, and 59 seconds date_interval_max: P1Y # 1 year # Check an arbitrary date in a CSV cell for age (years). # Actually it calculates the difference between the date and the current date. # Convenient to use for age restrictions based on birthday. # See the description of `date_*` functions for details on date formats. date_age_min: 1 # x >= 1 date_age_greater: 14 # x > 14 date_age_not: 18 # x != 18 date_age: 21 # x == 21 date_age_less: 99 # x < 99 date_age_max: 100 # x <= 100 # Specific formats is_bool: true # Allow only boolean values "true" and "false", case-insensitive. is_binary: true # Both: with or without "0b" prefix. Example: "0b10" or "10". is_octal: true # Validates octal numbers in the format "0o123". is_hex: true # Both: with or without "0x" prefix. Example: "0x1A". is_uuid: true # Validates whether the input is a valid UUID. It also supports validation of specific versions 1, 3, 4 and 5. is_slug: true # Only slug format. Example: "my-slug-123". It can contain letters, numbers, and dashes. is_currency_code: true # Validates an ISO 4217 currency code like GBP or EUR. Case-sensitive. See: https://en.wikipedia.org/wiki/ISO_4217. is_base64: true # Validate if a string is Base64-encoded. Example: "cmVzcGVjdCE=". is_angle: true # Check if the cell value is a valid angle (0.0 to 360.0). # Safity checks # Password strength calculation criteria include: Length (max 5 points, +1 every 2 characters), # presence of uppercase letters (+1), lowercase letters (+1), numbers (+1), special characters (+1), # spaces (+1), and penalties for consecutive sequences of uppercase, lowercase, or # numbers (-0.5 each), repetitive sequences (-0.75 each), common weak passwords like "qwerty", # and passwords under 6 characters (-2). Adjust scores to a 0 to 10 scale, with a minimum score of 0. password_strength_min: 1 # x >= 1 password_strength_greater: 2 # x > 2 password_strength_not: 0 # x != 0 password_strength: 7 # x == 7 password_strength_less: 8 # x < 8 password_strength_max: 9 # x <= 9 is_password_safe_chars: true # Check that the cell value contains only safe characters for regular passwords. Allowed characters: a-z, A-Z, 0-9, !@#$%^&*()_+-=[]{};:'"|,.<>/?~. # Internet is_ip: true # Both: IPv4 or IPv6. is_ip_v4: true # Only IPv4. Example: "127.0.0.1". is_ip_v6: true # Only IPv6. Example: "2001:0db8:85a3:08d3:1319:8a2e:0370:7334". is_ip_private: true # IPv4 has ranges: 10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16. IPv6 has ranges starting with FD or FC. is_ip_reserved: true # IPv4 has ranges: 0.0.0.0/8, 169.254.0.0/16, 127.0.0.0/8 and 240.0.0.0/4. IPv6 has ranges: ::1/128, ::/128, ::ffff:0:0/96 and fe80::/10. ip_v4_range: [ '127.0.0.1-127.0.0.5', '127.0.0.0/21' ] # Check subnet mask or range for IPv4. Address must be in one of the ranges. is_mac_address: true # The input is a valid MAC address. Example: 00:00:5e:00:53:01 is_domain: true # Only domain name. Example: "example.com". is_public_domain_suffix: true # The input is a public ICANN domain suffix. Example: "com", "nom.br", "net" etc. is_url: true # Only URL format. Example: "https://example.com/page?query=string#anchor". is_email: true # Only email format. Example: "user@example.com". # Validates if the given input is a valid JSON. # This is possible if you escape all special characters correctly and use a special CSV format. is_json: true # Example: {"foo":"bar"}. # Geography is_latitude: true # Can be integer or float. Example: 50.123456. is_longitude: true # Can be integer or float. Example: -89.123456. is_geohash: true # Check if the value is a valid geohash. Example: "u4pruydqqvj". is_cardinal_direction: true # Valid cardinal direction. Case-insensitive. Available values: ["N", "S", "E", "W", "NE", "SE", "NW", "SW", "NONE"] is_usa_market_name: true # Check if the value is a valid USA market name. Example: "New York, NY". is_usa_state: true # Name or code of USA state name. Case-insensitive. Example: "CA" or "California". # Validates whether the input is a country code in ISO 3166-1 standard. # Available options: "alpha-2" (Ex: "US"), "alpha-3" (Ex: "USA"), "numeric" (Ex: "840"). # The rule uses data from iso-codes: https://salsa.debian.org/iso-codes-team/iso-codes. country_code: alpha-2 # Country code in ISO 3166-1 standard. Examples: "US", "USA", "840" # Validates whether the input is language code based on ISO 639. # Available options: "alpha-2" (Ex: "en"), "alpha-3" (Ex: "eng"). # See: https://en.wikipedia.org/wiki/ISO_639. language_code: alpha-2 # Examples: "en", "eng" # Filesystem (with IO!) is_file_exists: true # Check if file exists on the filesystem (It's FS IO operation!). is_dir_exists: true # Check if directory exists on the filesystem (It's FS IO operation!). # Mathematical is_fibonacci: true # Validates whether the input follows the Fibonacci integer sequence. Example: "8", "13". is_prime_number: true # Validates a prime number. Example: "3", "5", "7", "11". is_even: true # Check if the value is an even number. Example: "2", "4", "6". is_odd: true # Check if the value is an odd number. Example: "1", "7", "11". is_roman: true # Validates if the input is a Roman numeral. Example: "I", "IV", "XX". is_luhn: true # Luhn algorithm. See: https://en.wikipedia.org/wiki/Luhn_algorithm # Identifications phone: ALL # Validates if the input is a phone number. Specify the country code to validate the phone number for a specific country. Example: "ALL", "US", "BR".". postal_code: US # Validate postal code by country code (alpha-2). Example: "02179". Extracted from https://www.geonames.org is_iban: true # IBAN - International Bank Account Number. See: https://en.wikipedia.org/wiki/International_Bank_Account_Number is_bic: true # Validates a Bank Identifier Code (BIC) according to ISO 9362 standards. See: https://en.wikipedia.org/wiki/ISO_9362 is_imei: true # Validates an International Mobile Equipment Identity (IMEI). See: https://en.wikipedia.org/wiki/International_Mobile_Station_Equipment_Identity is_isbn: true # Validates an International Standard Book Number (ISBN). See: https://www.isbn-international.org/content/what-isbn # Misc is_version: true # Validates the string as version numbers using Semantic Versioning. Example: "1.2.3". is_punct: true # Validates whether the input composed by only punctuation characters. Example: "!@#$%^&*()". is_vowel: true # Validates whether the input contains only vowels. Example: "aei". is_consonant: true # Validates if the input contains only consonants. Example: "bcd". is_alnum: true # Validates whether the input is only alphanumeric. Example: "aBc123". is_alpha: true # This is similar to `is_alnum`, but it does not allow numbers. Example: "aBc". is_hex_rgb_color: true # Validates weather the input is a hex RGB color or not. Examples: "#FF0000", "#123", "ffffff", "fff". # Check if the value is a valid hash. Supported algorithms: # - md5, md4, md2, sha1, sha224, sha256, sha384, sha512/224, sha512/256, sha512 # - sha3-224, sha3-256, sha3-384, sha3-512, ripemd128, ripemd160, ripemd256, ripemd320, whirlpool, tiger128,3 # - tiger160,3, tiger192,3, tiger128,4, tiger160,4, tiger192,4, snefru, snefru256, gost, gost-crypto, crc32 # - crc32b, crc32c, adler32, fnv132, fnv1a32, fnv164, fnv1a64, joaat, murmur3a, murmur3c # - murmur3f, xxh32, xxh64, xxh3, xxh128, haval128,3, haval160,3, haval192,3, haval224,3, haval256,3 # - haval128,4, haval160,4, haval192,4, haval224,4, haval256,4, haval128,5, haval160,5, haval192,5, haval224,5, haval256,5 hash: set_algo # Example: "1234567890abcdef". # Check if a string is in a specific charset. Available charsets: # - 7bit, 8bit, ASCII, ArmSCII-8, BASE64, BIG-5, CP850, CP866, CP932, CP936 # - CP950, CP50220, CP50221, CP50222, CP51932, EUC-CN, EUC-JP, EUC-JP-2004, EUC-KR, EUC-TW # - GB18030, HTML-ENTITIES, HZ, ISO-2022-JP, ISO-2022-JP-2004, ISO-2022-JP-MOBILE#KDDI, ISO-2022-JP-MS, ISO-2022-KR, ISO-8859-1, ISO-8859-2 # - ISO-8859-3, ISO-8859-4, ISO-8859-5, ISO-8859-6, ISO-8859-7, ISO-8859-8, ISO-8859-9, ISO-8859-10, ISO-8859-13, ISO-8859-14 # - ISO-8859-15, ISO-8859-16, JIS, KOI8-R, KOI8-U, Quoted-Printable, SJIS, SJIS-2004, SJIS-Mobile#DOCOMO, SJIS-Mobile#KDDI # - SJIS-Mobile#SOFTBANK, SJIS-mac, SJIS-win, UCS-2, UCS-2BE, UCS-2LE, UCS-4, UCS-4BE, UCS-4LE, UHC # - UTF-7, UTF-8, UTF-8-Mobile#DOCOMO, UTF-8-Mobile#KDDI-A, UTF-8-Mobile#KDDI-B, UTF-8-Mobile#SOFTBANK, UTF-16, UTF-16BE, UTF-16LE, UTF-32 # - UTF-32BE, UTF-32LE, UTF7-IMAP, UUENCODE, Windows-1251, Windows-1252, Windows-1254, eucJP-win charset: charset_code # Validates if a string is in a specific charset. Example: "UTF-8". # Validates whether the input is a credit card number. # Available credit card brands: "Any", "American Express", "Diners Club", "Discover", "JCB", "MasterCard", "Visa", "RuPay". credit_card: Any # Example: "5376-7473-9720-8720" #################################################################################################################### # Data validation for the entire(!) column using different data aggregation methods. # Every rule is optional. aggregate_rules: preset: my-preset/login # Add preset aggregate rules for the column. See README.md for details. is_unique: true # All values in the column are unique. # Check if the column is sorted in a specific order. # - Direction: ["asc", "desc"]. # - Method: ["numeric", "string", "natural", "regular"]. # See: https://php.ac.cn/manual/en/function.sort.php sorted: [ asc, natural ] # Expected ascending order, natural sorting. # First number in the column. Expected value is float or integer. first_num_min: 1.0 # x >= 1.0 first_num_greater: 2.0 # x > 2.0 first_num_not: 5.0 # x != 5.0 first_num: 7.0 # x == 7.0 first_num_less: 8.0 # x < 8.0 first_num_max: 9.0 # x <= 9.0 first: Expected # First value in the column. Will be compared as strings. first_not: Not expected # Not allowed as the first value in the column. Will be compared as strings. # N-th value in the column. # The rule expects exactly two arguments: the first is the line number (without header), the second is the expected value. # Example: `[ 42, 5.0 ]` On the line 42 (disregarding the header), we expect the 5.0. The comparison is always as float. nth_num_min: [ 42, 1.0 ] # x >= 1.0 nth_num_greater: [ 42, 2.0 ] # x > 2.0 nth_num_not: [ 42, 5.0 ] # x != 5.0 nth_num: [ 42, 7.0 ] # x == 7.0 nth_num_less: [ 42, 8.0 ] # x < 8.0 nth_num_max: [ 42, 9.0 ] # x <= 9.0 nth: [ 2, Expected ] # Nth value in the column. Will be compared as strings. nth_not: [ 2, Not expected ] # Not allowed as the N-th value in the column. Will be compared as strings. # Last number in the column. Expected value is float or integer. last_num_min: 1.0 # x >= 1.0 last_num_greater: 2.0 # x > 2.0 last_num_not: 5.0 # x != 5.0 last_num: 7.0 # x == 7.0 last_num_less: 8.0 # x < 8.0 last_num_max: 9.0 # x <= 9.0 last: Expected # Last value in the column. Will be compared as strings. last_not: Not expected # Not allowed as the last value in the column. Will be compared as strings. # Sum of the numbers in the column. Example: [1, 2, 3] => 6. sum_min: 1.0 # x >= 1.0 sum_greater: 2.0 # x > 2.0 sum_not: 5.0 # x != 5.0 sum: 7.0 # x == 7.0 sum_less: 8.0 # x < 8.0 sum_max: 9.0 # x <= 9.0 # Regular the arithmetic mean. The sum of the numbers divided by the count. average_min: 1.0 # x >= 1.0 average_greater: 2.0 # x > 2.0 average_not: 5.0 # x != 5.0 average: 7.0 # x == 7.0 average_less: 8.0 # x < 8.0 average_max: 9.0 # x <= 9.0 # Total number of rows in the CSV file. # Since any(!) values are taken into account, it only makes sense to use these rules once in any column. count_min: 1 # x >= 1 count_greater: 2 # x > 2 count_not: 0 # x != 0 count: 7 # x == 7 count_less: 8 # x < 8 count_max: 9 # x <= 9 # Counts only empty values (string length is 0). count_empty_min: 1 # x >= 1 count_empty_greater: 2 # x > 2 count_empty_not: 0 # x != 0 count_empty: 7 # x == 7 count_empty_less: 8 # x < 8 count_empty_max: 9 # x <= 9 # Counts only not empty values (string length is not 0). count_not_empty_min: 1 # x >= 1 count_not_empty_greater: 2 # x > 2 count_not_empty_not: 0 # x != 0 count_not_empty: 7 # x == 7 count_not_empty_less: 8 # x < 8 count_not_empty_max: 9 # x <= 9 # Number of unique values. count_distinct_min: 1 # x >= 1 count_distinct_greater: 2 # x > 2 count_distinct_not: 0 # x != 0 count_distinct: 7 # x == 7 count_distinct_less: 8 # x < 8 count_distinct_max: 9 # x <= 9 # Number of positive values. count_positive_min: 1 # x >= 1 count_positive_greater: 2 # x > 2 count_positive_not: 0 # x != 0 count_positive: 7 # x == 7 count_positive_less: 8 # x < 8 count_positive_max: 9 # x <= 9 # Number of negative values. count_negative_min: 1 # x >= 1 count_negative_greater: 2 # x > 2 count_negative_not: 0 # x != 0 count_negative: 7 # x == 7 count_negative_less: 8 # x < 8 count_negative_max: 9 # x <= 9 # Number of zero values. Any text and spaces (i.e. anything that doesn't look like a number) will be converted to 0. count_zero_min: 1 # x >= 1 count_zero_greater: 2 # x > 2 count_zero_not: 0 # x != 0 count_zero: 7 # x == 7 count_zero_less: 8 # x < 8 count_zero_max: 9 # x <= 9 # Number of even values. count_even_min: 1 # x >= 1 count_even_greater: 2 # x > 2 count_even_not: 0 # x != 0 count_even: 7 # x == 7 count_even_less: 8 # x < 8 count_even_max: 9 # x <= 9 # Number of odd values. count_odd_min: 1 # x >= 1 count_odd_greater: 2 # x > 2 count_odd_not: 0 # x != 0 count_odd: 7 # x == 7 count_odd_less: 8 # x < 8 count_odd_max: 9 # x <= 9 # Number of prime values. count_prime_min: 1 # x >= 1 count_prime_greater: 2 # x > 2 count_prime_not: 0 # x != 0 count_prime: 7 # x == 7 count_prime_less: 8 # x < 8 count_prime_max: 9 # x <= 9 # Calculate the median average of a list of numbers. # See: https://en.wikipedia.org/wiki/Median median_min: 1.0 # x >= 1.0 median_greater: 2.0 # x > 2.0 median_not: 5.0 # x != 5.0 median: 7.0 # x == 7.0 median_less: 8.0 # x < 8.0 median_max: 9.0 # x <= 9.0 # Harmonic mean (subcontrary mean). The harmonic mean can be expressed as the reciprocal of the arithmetic mean of the reciprocals. # Appropriate for situations when the average of rates is desired. # See: https://en.wikipedia.org/wiki/Harmonic_mean harmonic_mean_min: 1.0 # x >= 1.0 harmonic_mean_greater: 2.0 # x > 2.0 harmonic_mean_not: 5.0 # x != 5.0 harmonic_mean: 7.0 # x == 7.0 harmonic_mean_less: 8.0 # x < 8.0 harmonic_mean_max: 9.0 # x <= 9.0 # Geometric mean. A type of mean which indicates the central tendency or typical value of a set of numbers # by using the product of their values (as opposed to the arithmetic mean which uses their sum). # See: https://en.wikipedia.org/wiki/Geometric_mean geometric_mean_min: 1.0 # x >= 1.0 geometric_mean_greater: 2.0 # x > 2.0 geometric_mean_not: 5.0 # x != 5.0 geometric_mean: 7.0 # x == 7.0 geometric_mean_less: 8.0 # x < 8.0 geometric_mean_max: 9.0 # x <= 9.0 # Contraharmonic mean. A function complementary to the harmonic mean. A special case of the Lehmer mean, L₂(x), where p = 2. # See: https://en.wikipedia.org/wiki/Contraharmonic_mean contraharmonic_mean_min: 1.0 # x >= 1.0 contraharmonic_mean_greater: 2.0 # x > 2.0 contraharmonic_mean_not: 5.0 # x != 5.0 contraharmonic_mean: 7.0 # x == 7.0 contraharmonic_mean_less: 8.0 # x < 8.0 contraharmonic_mean_max: 9.0 # x <= 9.0 # Root mean square (quadratic mean) The square root of the arithmetic mean of the squares of a set of numbers. # See: https://en.wikipedia.org/wiki/Root_mean_square root_mean_square_min: 1.0 # x >= 1.0 root_mean_square_greater: 2.0 # x > 2.0 root_mean_square_not: 5.0 # x != 5.0 root_mean_square: 7.0 # x == 7.0 root_mean_square_less: 8.0 # x < 8.0 root_mean_square_max: 9.0 # x <= 9.0 # Trimean (TM, or Tukey's trimean). # A measure of a probability distribution's location defined as a weighted average of the distribution's median and its two quartiles. # See: https://en.wikipedia.org/wiki/Trimean trimean_min: 1.0 # x >= 1.0 trimean_greater: 2.0 # x > 2.0 trimean_not: 5.0 # x != 5.0 trimean: 7.0 # x == 7.0 trimean_less: 8.0 # x < 8.0 trimean_max: 9.0 # x <= 9.0 # Cubic mean. See: https://en.wikipedia.org/wiki/Cubic_mean cubic_mean_min: 1.0 # x >= 1.0 cubic_mean_greater: 2.0 # x > 2.0 cubic_mean_not: 5.0 # x != 5.0 cubic_mean: 7.0 # x == 7.0 cubic_mean_less: 8.0 # x < 8.0 cubic_mean_max: 9.0 # x <= 9.0 # Compute the P-th percentile of a list of numbers. # Linear interpolation between closest ranks method - Second variant, C = 1 P-th percentile (0 <= P <= 100) of a list of N ordered values (sorted from least to greatest). # Similar method used in NumPy and Excel. # See: https://en.wikipedia.org/wiki/Percentile#Second_variant.2C_.7F.27.22.60UNIQ--postMath-00000043-QINU.60.22.27.7F # Example: `[ 95.5, 1.234 ]` The 95.5th percentile in the column must be "1.234" (float). percentile_min: [ 95.0, 1.0 ] # x >= 1.0 percentile_greater: [ 95.0, 2.0 ] # x > 2.0 percentile_not: [ 95.0, 5.0 ] # x != 5.0 percentile: [ 95.0, 7.0 ] # x == 7.0 percentile_less: [ 95.0, 8.0 ] # x < 8.0 percentile_max: [ 95.0, 9.0 ] # x <= 9.0 # Quartiles. Three points that divide the data set into four equal groups, each group comprising a quarter of the data. # See: https://en.wikipedia.org/wiki/Quartile # There are multiple methods for computing quartiles: ["exclusive", "inclusive"]. Exclusive is ussually classic. # Available types: ["0%", "Q1", "Q2", "Q3", "100%", "IQR"] ("IQR" is Interquartile Range) # Example: `[ inclusive, 'Q3', 42.0 ]` - the Q3 inclusive quartile is 42.0 quartiles_min: [ exclusive, '0%', 1.0 ] # x >= 1.0 quartiles_greater: [ inclusive, 'Q1', 2.0 ] # x > 2.0 quartiles_not: [ exclusive, 'Q2', 5.0 ] # x != 5.0 quartiles: [ inclusive, 'Q3', 7.0 ] # x == 7.0 quartiles_less: [ exclusive, '100%', 8.0 ] # x < 8.0 quartiles_max: [ inclusive, 'IQR', 9.0 ] # x <= 9.0 # Midhinge. The average of the first and third quartiles and is thus a measure of location. # Equivalently, it is the 25% trimmed mid-range or 25% midsummary; it is an L-estimator. # See: https://en.wikipedia.org/wiki/Midhinge # Midhinge = (first quartile, third quartile) / 2 midhinge_min: 1.0 # x >= 1.0 midhinge_greater: 2.0 # x > 2.0 midhinge_not: 5.0 # x != 5.0 midhinge: 7.0 # x == 7.0 midhinge_less: 8.0 # x < 8.0 midhinge_max: 9.0 # x <= 9.0 # MAD - mean absolute deviation. The average of the absolute deviations from a central point. # It is a summary statistic of statistical dispersion or variability. # See: https://en.wikipedia.org/wiki/Average_absolute_deviation mean_abs_dev_min: 1.0 # x >= 1.0 mean_abs_dev_greater: 2.0 # x > 2.0 mean_abs_dev_not: 5.0 # x != 5.0 mean_abs_dev: 7.0 # x == 7.0 mean_abs_dev_less: 8.0 # x < 8.0 mean_abs_dev_max: 9.0 # x <= 9.0 # MAD - median absolute deviation. The average of the absolute deviations from a central point. # It is a summary statistic of statistical dispersion or variability. # It is a robust measure of the variability of a univariate sample of quantitative data. # See: https://en.wikipedia.org/wiki/Median_absolute_deviation median_abs_dev_min: 1.0 # x >= 1.0 median_abs_dev_greater: 2.0 # x > 2.0 median_abs_dev_not: 5.0 # x != 5.0 median_abs_dev: 7.0 # x == 7.0 median_abs_dev_less: 8.0 # x < 8.0 median_abs_dev_max: 9.0 # x <= 9.0 # Population variance - Use when all possible observations of the system are present. # If used with a subset of data (sample variance), it will be a biased variance. # n degrees of freedom, where n is the number of observations. population_variance_min: 1.0 # x >= 1.0 population_variance_greater: 2.0 # x > 2.0 population_variance_not: 5.0 # x != 5.0 population_variance: 7.0 # x == 7.0 population_variance_less: 8.0 # x < 8.0 population_variance_max: 9.0 # x <= 9.0 # Unbiased sample variance Use when only a subset of all possible observations of the system are present. # n - 1 degrees of freedom, where n is the number of observations. sample_variance_min: 1.0 # x >= 1.0 sample_variance_greater: 2.0 # x > 2.0 sample_variance_not: 5.0 # x != 5.0 sample_variance: 7.0 # x == 7.0 sample_variance_less: 8.0 # x < 8.0 sample_variance_max: 9.0 # x <= 9.0 # Standard deviation (For a sample; uses sample variance). It also known as SD or StdDev. # StdDev is a measure that is used to quantify the amount of variation or dispersion of a set of data values. # - Low standard deviation indicates that the data points tend to be close to the mean (also called the expected value) of the set. # - High standard deviation indicates that the data points are spread out over a wider range of values. # See: https://en.wikipedia.org/wiki/Standard_deviation stddev_min: 1.0 # x >= 1.0 stddev_greater: 2.0 # x > 2.0 stddev_not: 5.0 # x != 5.0 stddev: 7.0 # x == 7.0 stddev_less: 8.0 # x < 8.0 stddev_max: 9.0 # x <= 9.0 # SD+ (Standard deviation for a population; uses population variance) stddev_pop_min: 1.0 # x >= 1.0 stddev_pop_greater: 2.0 # x > 2.0 stddev_pop_not: 5.0 # x != 5.0 stddev_pop: 7.0 # x == 7.0 stddev_pop_less: 8.0 # x < 8.0 stddev_pop_max: 9.0 # x <= 9.0 # Coefficient of variation (cᵥ) Also known as relative standard deviation (RSD) # A standardized measure of dispersion of a probability distribution or frequency distribution. # It is often expressed as a percentage. The ratio of the standard deviation to the mean. # See: https://en.wikipedia.org/wiki/Coefficient_of_variation coef_of_var_min: 1.0 # x >= 1.0 coef_of_var_greater: 2.0 # x > 2.0 coef_of_var_not: 5.0 # x != 5.0 coef_of_var: 7.0 # x == 7.0 coef_of_var_less: 8.0 # x < 8.0 coef_of_var_max: 9.0 # x <= 9.0 # Interquartile mean (IQM). A measure of central tendency based on the truncated mean of the interquartile range. # Only the data in the second and third quartiles is used (as in the interquartile range), and the lowest 25% and the highest 25% of the scores are discarded. # See: https://en.wikipedia.org/wiki/Interquartile_mean # Note: It's SUPER slow!!! interquartile_mean_min: 1.0 # x >= 1.0 interquartile_mean_greater: 2.0 # x > 2.0 interquartile_mean_not: 5.0 # x != 5.0 interquartile_mean: 7.0 # x == 7.0 interquartile_mean_less: 8.0 # x < 8.0 interquartile_mean_max: 9.0 # x <= 9.0 - name: another_column rules: not_empty: true - name: inherited_column_login preset: my-preset/login - name: inherited_column_full_name preset: my-preset/full_name

额外检查

除了YAML配置中概述的架构外,该工具默认执行多个底层检查,以确保对您的CSV文件进行彻底验证。

filename_pattern规则验证文件名是否符合指定的正则表达式模式,确保遵循文件命名规范。- 确保每个列的

name属性已定义,仅在csv.header设置为true时适用,以保证头部的完整性。 required属性,当设置为true时,要求CSV文件中存在指定的列,增强数据的完整性。这仅在csv.header为true时相关。- 验证每行是否包含正确数量的列,与架构定义的结构相匹配,以防止数据错位。

strict_column_order规则检查列的顺序是否与架构中定义的一致,确保结构的一致性。allow_extra_columns规则断言CSV文件中不包含架构中指定的列之外的额外列,保持数据的高度一致性。- 对于

csv.header: true,它会检查架构中是否包含CSV文件中找不到的任何name列,以解决头部差异。 - 对于

csv.header: false,它会比较架构中的列数与CSV文件中的列数,以确保架构的一致性。

- 对于

预设和可重复使用的模式

预设显著提高了CSV文件验证中架构定义的效率和可重用性,通过确保具有常见验证规则(如用户ID和电子邮件地址)的各种文件的一致性。

这种一致性维护了数据的完整性,并简化了维护工作,通过允许集中更新,这些更新会自动应用于所有相关联的架构。此外,预设支持通过字段特定的规则覆盖进行自定义,从而促进标准化和满足特定需求。

专注于你的任务,而不是复制粘贴。

带有预设的示例

让我们看一个现实生活中的例子。假设你有一个“库”不同用户配置文件验证规则,可以用于各种CSV文件。

为了避免关心完整性,并避免复制粘贴,你可以重用任何现有架构。事实上,这可以被认为是部分继承。

重要提示

- 你可以使继承链无限长。即形式为

grant-parent.yml->parent.yml->child.yml->grandchild.yml-> 等。当然,如果你喜欢冒险 ;). - 但要注意循环依赖。工具将无法处理循环依赖,它可能导致无限循环。

- 任何(!)架构文件都可以单独使用或作为库使用。语法是相同的。

- 具有预设的架构会自动验证自身,如果存在任何明显问题,你将在尝试使用架构时看到它们。但是,规则之间的逻辑冲突不会被检查(从代码的角度来看几乎是不可能的)。如上所述,规则是独立工作的,彼此之间没有意识。因此,规则集合始终是你的责任。

- 预设中的别名必须匹配正则表达式模式

/^[a-z0-9-_]+$/i。否则,可能会破坏语法。

如果出现错误

如果你在使用预设时遇到问题,不理解CSV蓝图底层如何理解它,只需添加 --dump-schema 即可查看它。还有单独的CLI命令用于转储架构。

./csv-blueprint debug-schema -s ./your/schema.yml

让我们看看代码中这看起来是什么样子。

- 为数据库列定义一些基本规则。

- 其中一些文件将包含仅针对用户配置文件特定的规则。

- 当然,让我们制作一个可以同时重用这两个文件规则的模式。

因此,你不仅得到了一些用于验证的模式,这很难管理,而且还得到了一个针对你项目具体情况的框架(!),特别是当你有数十个甚至数百个CSV文件和规则时。这将更容易实现一致性。这通常非常重要。

点击查看源代码

name: Presets for database columns description: This schema contains basic rules for database user data. columns: - name: id description: Unique identifier, usually used to denote a primary key in databases. example: 12345 extra: custom_key: custom value rules: not_empty: true is_trimmed: true is_int: true num_min: 1 aggregate_rules: is_unique: true sorted: [ asc, numeric ] - name: status description: Status in database example: active rules: not_empty: true allow_values: [ active, inactive, pending, deleted ]

点击查看源代码

name: Common presets for user data description: > This schema contains common presets for user data. It can be used as a base for other schemas. filename_pattern: /users-.*\.csv$/i csv: delimiter: ';' columns: - name: login description: User's login name example: johndoe rules: not_empty: true is_trimmed: true is_lowercase: true is_slug: true length_min: 3 length_max: 20 is_alnum: true aggregate_rules: is_unique: true - name: password description: User's password example: '9RfzENKD' rules: not_empty: true is_trimmed: true is_password_safe_chars: true password_strength_min: 7 contains_none: [ "password", "123456", "qwerty", " " ] charset: UTF-8 length_min: 6 length_max: 20 - name: full_name description: User's full name example: 'John Doe Smith' rules: not_empty: true is_trimmed: true charset: UTF-8 contains: " " word_count_min: 2 word_count_max: 8 is_capitalize: true aggregate_rules: is_unique: true - name: email description: User's email address example: user@example.com rules: not_empty: true is_trimmed: true is_email: true is_lowercase: true aggregate_rules: is_unique: true - name: birthday description: Validates the user's birthday. example: '1990-01-01' rules: not_empty: true # The birthday field must not be empty. is_trimmed: true # Trims the value before validation. date_format: Y-m-d # Checks if the date matches the YYYY-MM-DD format. is_date: true # Validates if the value is a valid date. date_age_greater: 0 # Ensures the date is in the past. date_age_less: 150 # Ensures the user is not older than 150 years. date_max: now # Ensures the date is not in the future. - name: phone_number description: User's phone number in US example: '+1 650 253 00 00' rules: not_empty: true is_trimmed: true starts_with: '+1' phone: US - name: balance description: User's balance in USD example: '100.00' rules: not_empty: true is_trimmed: true is_float: true num_min: 0.00 num_max: 1000000000.00 # 1 billion is max amount in our system. precision: 2 - name: short_description description: A brief description of the item example: 'Lorem ipsum dolor sit amet' rules: not_empty: true contains: " " length_max: 255 is_trimmed: true

预设使用方法这个简短清晰的Yaml文件内部大致如下。如你所见,它大大简化了你的工作。

name: Schema uses presets and add new columns + specific rules. description: This schema uses presets. Also, it demonstrates how to override preset values. presets: # Include any other schemas and defined for each alias. users: ./preset_users.yml # Include the schema with common user data. db: ./preset_database.yml # Include the schema with basic database columns. csv: preset: users # Take the CSV settings from the preset. enclosure: '|' # Overridden enclosure only for this schema. columns: # Grap only needed columns from the preset in specific order. - preset: db/id - preset: db/status - preset: users/login - preset: users/email - preset: users/full_name - preset: users/birthday - preset: users/phone_number # Rename the column. "phone_number" => "phone". name: phone - preset: users/password # Overridden value to force a strong password. rules: { length_min: 10 } - name: admin_note # New column specific only this schema. description: Admin note rules: not_empty: true length_min: 1 length_max: 10 aggregate_rules: # In practice this will be a rare case, but the opportunity is there. preset: db/id # Take only aggregate rules from the preset. is_unique: true # Added new specific aggregate rule.

点击查看它在内存中的样子。

# Schema file is "./schema-examples/preset_usage.yml" name: 'Schema uses presets and add new columns + specific rules.' description: 'This schema uses presets. Also, it demonstrates how to override preset values.' presets: users: ./schema-examples/preset_users.yml db: ./schema-examples/preset_database.yml csv: delimiter: ; enclosure: '|' columns: - name: id description: 'Unique identifier, usually used to denote a primary key in databases.' example: 12345 extra: custom_key: 'custom value' rules: not_empty: true is_trimmed: true is_int: true num_min: 1 aggregate_rules: is_unique: true sorted: - asc - numeric - name: status description: 'Status in database' example: active rules: not_empty: true allow_values: - active - inactive - pending - deleted - name: login description: "User's login name" example: johndoe rules: not_empty: true length_min: 3 length_max: 20 is_trimmed: true is_lowercase: true is_slug: true is_alnum: true aggregate_rules: is_unique: true - name: email description: "User's email address" example: user@example.com rules: not_empty: true is_trimmed: true is_lowercase: true is_email: true aggregate_rules: is_unique: true - name: full_name description: "User's full name" example: 'John Doe Smith' rules: not_empty: true is_trimmed: true is_capitalize: true word_count_min: 2 word_count_max: 8 contains: ' ' charset: UTF-8 aggregate_rules: is_unique: true - name: birthday description: "Validates the user's birthday." example: '1990-01-01' rules: not_empty: true is_trimmed: true is_date: true date_max: now date_format: Y-m-d date_age_greater: 0 date_age_less: 150 - name: phone description: "User's phone number in US" example: '+1 650 253 00 00' rules: not_empty: true is_trimmed: true starts_with: '+1' phone: US - name: password description: "User's password" example: 9RfzENKD rules: not_empty: true length_min: 10 length_max: 20 is_trimmed: true contains_none: - password - '123456' - qwerty - ' ' password_strength_min: 7 is_password_safe_chars: true charset: UTF-8 - name: admin_note description: 'Admin note' rules: not_empty: true length_min: 1 length_max: 10 aggregate_rules: is_unique: true sorted: - asc - numeric

因此,可读性和可维护性变得非常容易。你可以轻松添加新规则,更改现有规则等。

包含所有可用语法的完整示例

点击查看可用语法。

name: Complite list of preset features description: This schema contains all the features of the presets. presets: # The basepath for the preset is `.` (current directory of the current schema file). # Define alias "db" for schema in `./preset_database.yml`. db: preset_database.yml # Or `db: ./preset_database.yml`. It's up to you. # For example, you can use a relative path. users: ./../schema-examples/preset_users.yml # Or you can use an absolute path. # db: /full/path/preset_database.yml filename_pattern: { preset: users } # Take the filename pattern from the preset. structural_rules: { preset: users } # Take the global rules from the preset. csv: { preset: users } # Take the CSV settings from the preset. columns: # Use name of column from the preset. # "db" is alias. "id" is column `name` in `preset_database.yml`. - preset: 'db/id' # Use column index. "db" is alias. "0" is column index in `preset_database.yml`. - preset: 'db/0' - preset: 'db/0:' # Use column index and column name. It useful if column name is not unique. - preset: 'db/0:id' # Use only `rules` of "status" column from the preset. - name: My column rules: preset: 'db/status' # Override only `aggregate_rules` from the preset. # Use only `aggregate_rules` of "id" column from the preset. # We strictly take only the very first column (index = 0). - name: My column aggregate_rules: preset: 'db/0:id' # Combo!!! If you're a risk-taker or have a high level of inner zen. :) # Creating a column from three other columns. # In fact, it will merge all three at once with key replacement. - name: Crazy combo! description: > # Just a great advice. I like to take risks, too. Be careful. Use your power wisely. example: ~ # Ignore inherited "example" value. Set it `null`. preset: 'users/login' rules: preset: 'users/email' not_empty: true # Disable the rule from the preset. aggregate_rules: preset: 'db/0'

注意:所有提供的YAML示例都通过内置验证,但它们可能没有实际意义。这些仅供演示,以说明潜在的配置和功能。

并行处理

有--parallel选项可用于通过有效利用更多CPU资源来加快CSV文件验证的速度。

关键点

- 实验性功能:此功能目前处于实验阶段,需要进一步的调试和测试。尽管它在合成自动测试和基准测试中表现良好。需要更多实际用例来验证其稳定性。

- 用例:如果你正在处理数十个CSV文件,每个文件处理时间超过1秒,此选项很有益。

- 默认行为:如果你使用

--parallel而没有指定值,则默认使用可用的最大CPU核心数。 - 线程池大小:你可以为池设置特定数量的线程。例如,

--parallel=10将线程池大小设置为10。指定超过CPU逻辑核心数量的值没有太大意义。否则,它只会因为多线程处理带来的系统开销而稍微减慢速度。 - 禁用并行处理:使用

--parallel=1禁用并行处理,这是未指定选项时的默认设置。 - 实现:该功能依赖于

ext-parallelPHP扩展,该扩展允许创建轻量级线程而不是进程。此扩展已包含在我们的Docker镜像中。如果你没有使用我们的Docker镜像,请确保已安装ext-parallel扩展。此扩展对于并行处理功能的运行至关重要。如果未安装扩展,应用程序始终以单线程模式运行。

完整的CLI帮助信息

本节概述了工具提供的所有可用选项和命令,利用JBZoo/Cli包进行CLI。该工具提供了一套全面的选项,以满足各种需求和场景,确保在CSV文件验证和处理中的灵活性和效率。

有关每个命令和选项的详细信息,请参阅JBZoo/Cli文档。此资源提供了有关CLI命令的功能和应用见解,帮助用户充分利用工具的功能。

./csv-blueprint validate-csv --help

点击查看validate-csv帮助信息

Description:

Validate CSV file(s) by schema(s).

Usage:

validate-csv [options]

validate:csv

Options:

-c, --csv=CSV Specify the path(s) to the CSV files you want to validate.

This can include a direct path to a file or a directory to search with a maximum depth of 10 levels.

Examples: p/file.csv; p/*.csv; p/**/*.csv; p/**/name-*.csv; **/*.csv

(multiple values allowed)

-s, --schema=SCHEMA Specify the path(s) to the schema file(s), supporting YAML, JSON, or PHP formats.

Similar to CSV paths, you can direct to specific files or search directories with glob patterns.

Examples: p/file.yml; p/*.yml; p/**/*.yml; p/**/name-*.yml; **/*.yml

(multiple values allowed)

-S, --skip-schema[=SKIP-SCHEMA] Skips schema validation for quicker checks when the schema's correctness is certain.

Use any non-empty value or "yes" to activate

[default: "no"]

-a, --apply-all[=APPLY-ALL] Apply all schemas (also without `filename_pattern`) to all CSV files found as global rules.

Available options:

- auto: If no glob pattern (*) is used for --schema, the schema is applied to all found CSV files.

- yes|y|1: Apply all schemas to all CSV files, Schemas without `filename_pattern` are applied as a global rule.

- no|n|0: Apply only schemas with not empty `filename_pattern` and match the CSV files.

Note. If specify the option `--apply-all` without value, it will be treated as "yes".

[default: "auto"]

-Q, --quick[=QUICK] Stops the validation process upon encountering the first error,

accelerating the check but limiting error visibility.

Returns a non-zero exit code if any error is detected.

Enable by setting to any non-empty value or "yes".

[default: "no"]

-r, --report=REPORT Determines the report's output format.

Available options: text, table, github, gitlab, teamcity, junit

[default: "table"]

--dump-schema Dumps the schema of the CSV file if you want to see the final schema after inheritance.

--debug Intended solely for debugging and advanced profiling purposes.

Activating this option provides detailed process insights,

useful for troubleshooting and performance analysis.

--parallel[=PARALLEL] EXPERIMENTAL! Launches the process in parallel mode (if possible). Works only with ext-parallel.

You can specify the number of threads.

If you do not specify a value, the number of threads will be equal to the number of CPU cores.

By default, the process is launched in a single-threaded mode. [default: "1"]

--no-progress Disable progress bar animation for logs. It will be used only for text output format.

--mute-errors Mute any sort of errors. So exit code will be always "0" (if it's possible).

It has major priority then --non-zero-on-error. It's on your own risk!

--stdout-only For any errors messages application will use StdOut instead of StdErr. It's on your own risk!

--non-zero-on-error None-zero exit code on any StdErr message.

--timestamp Show timestamp at the beginning of each message.It will be used only for text output format.

--profile Display timing and memory usage information.

--output-mode=OUTPUT-MODE Output format. Available options:

text - Default text output format, userfriendly and easy to read.

cron - Shortcut for crontab. It's basically focused on human-readable logs output.

It's combination of --timestamp --profile --stdout-only --no-progress -vv.

logstash - Logstash output format, for integration with ELK stack.

[default: "text"]

--cron Alias for --output-mode=cron. Deprecated!

-h, --help Display help for the given command. When no command is given display help for the list command

-q, --quiet Do not output any message

-V, --version Display this application version

--ansi|--no-ansi Force (or disable --no-ansi) ANSI output

-n, --no-interaction Do not ask any interactive question

-v|vv|vvv, --verbose Increase the verbosity of messages: 1 for normal output, 2 for more verbose output and 3 for debug

./csv-blueprint validate-schema --help

点击查看validate-schema帮助信息

Description:

Validate syntax in schema file(s).

Usage:

validate-schema [options]

Options:

-s, --schema=SCHEMA Specify the path(s) to the schema file(s), supporting YAML, JSON, or PHP formats.

Similar to CSV paths, you can direct to specific files or search directories with glob patterns.

Examples: /full/path/name.yml; p/file.yml; p/*.yml; p/**/*.yml; p/**/name-*.yml; **/*.yml

(multiple values allowed)

-Q, --quick[=QUICK] Stops the validation process upon encountering the first error,

accelerating the check but limiting error visibility.

Returns a non-zero exit code if any error is detected.

Enable by setting to any non-empty value or "yes".

[default: "no"]

-r, --report=REPORT Determines the report's output format.

Available options: text, table, github, gitlab, teamcity, junit

[default: "table"]

--dump-schema Dumps the schema of the CSV file if you want to see the final schema after inheritance.

--debug Intended solely for debugging and advanced profiling purposes.

Activating this option provides detailed process insights,

useful for troubleshooting and performance analysis.

--parallel[=PARALLEL] EXPERIMENTAL! Launches the process in parallel mode (if possible). Works only with ext-parallel.

You can specify the number of threads.

If you do not specify a value, the number of threads will be equal to the number of CPU cores.

By default, the process is launched in a single-threaded mode. [default: "1"]

--no-progress Disable progress bar animation for logs. It will be used only for text output format.

--mute-errors Mute any sort of errors. So exit code will be always "0" (if it's possible).

It has major priority then --non-zero-on-error. It's on your own risk!

--stdout-only For any errors messages application will use StdOut instead of StdErr. It's on your own risk!

--non-zero-on-error None-zero exit code on any StdErr message.

--timestamp Show timestamp at the beginning of each message.It will be used only for text output format.

--profile Display timing and memory usage information.

--output-mode=OUTPUT-MODE Output format. Available options:

text - Default text output format, userfriendly and easy to read.

cron - Shortcut for crontab. It's basically focused on human-readable logs output.

It's combination of --timestamp --profile --stdout-only --no-progress -vv.

logstash - Logstash output format, for integration with ELK stack.

[default: "text"]

--cron Alias for --output-mode=cron. Deprecated!

-h, --help Display help for the given command. When no command is given display help for the list command

-q, --quiet Do not output any message

-V, --version Display this application version

--ansi|--no-ansi Force (or disable --no-ansi) ANSI output

-n, --no-interaction Do not ask any interactive question

-v|vv|vvv, --verbose Increase the verbosity of messages: 1 for normal output, 2 for more verbose output and 3 for debug

./csv-blueprint dump-schema --help

点击查看debug-schema帮助信息

Description:

Show the internal representation of the schema taking into account presets.

Usage:

debug-schema [options]

Options:

-s, --schema=SCHEMA Specify the path to a schema file, supporting YAML, JSON, or PHP formats.

Examples: /full/path/name.yml; p/file.yml

-d, --hide-defaults Hide default values in the output.

--no-progress Disable progress bar animation for logs. It will be used only for text output format.

--mute-errors Mute any sort of errors. So exit code will be always "0" (if it's possible).

It has major priority then --non-zero-on-error. It's on your own risk!

--stdout-only For any errors messages application will use StdOut instead of StdErr. It's on your own risk!

--non-zero-on-error None-zero exit code on any StdErr message.

--timestamp Show timestamp at the beginning of each message.It will be used only for text output format.

--profile Display timing and memory usage information.

--output-mode=OUTPUT-MODE Output format. Available options:

text - Default text output format, userfriendly and easy to read.

cron - Shortcut for crontab. It's basically focused on human-readable logs output.

It's combination of --timestamp --profile --stdout-only --no-progress -vv.

logstash - Logstash output format, for integration with ELK stack.

[default: "text"]

--cron Alias for --output-mode=cron. Deprecated!

-h, --help Display help for the given command. When no command is given display help for the list command

-q, --quiet Do not output any message

-V, --version Display this application version

--ansi|--no-ansi Force (or disable --no-ansi) ANSI output

-n, --no-interaction Do not ask any interactive question

-v|vv|vvv, --verbose Increase the verbosity of messages: 1 for normal output, 2 for more verbose output and 3 for debug

./csv-blueprint create-schema --help 它处于测试阶段。工作正在进行中。

点击查看创建架构的帮助信息

Description:

Analyze CSV files and suggest a schema based on the data found.

Usage:

create-schema [options]

Options:

-c, --csv=CSV Specify the path(s) to the CSV files you want to analyze.

This can include a direct path to a file or a directory to search with a maximum depth of 10 levels.

Examples: p/file.csv; p/*.csv; p/**/*.csv; p/**/name-*.csv; **/*.csv

(multiple values allowed)

-H, --header[=HEADER] Force the presence of a header row in the CSV files. [default: "yes"]

-L, --lines[=LINES] The number of lines to read when detecting parameters. Minimum is 1. [default: 10000]

-C, --check-syntax[=CHECK-SYNTAX] Check the syntax of the suggested schema. [default: "yes"]

-r, --report=REPORT Determines the report's output format.

Available options: text, table, github, gitlab, teamcity, junit

[default: "table"]

--dump-schema Dumps the schema of the CSV file if you want to see the final schema after inheritance.

--debug Intended solely for debugging and advanced profiling purposes.

Activating this option provides detailed process insights,

useful for troubleshooting and performance analysis.

--parallel[=PARALLEL] EXPERIMENTAL! Launches the process in parallel mode (if possible). Works only with ext-parallel.

You can specify the number of threads.

If you do not specify a value, the number of threads will be equal to the number of CPU cores.

By default, the process is launched in a single-threaded mode. [default: "1"]

--no-progress Disable progress bar animation for logs. It will be used only for text output format.

--mute-errors Mute any sort of errors. So exit code will be always "0" (if it's possible).

It has major priority then --non-zero-on-error. It's on your own risk!

--stdout-only For any errors messages application will use StdOut instead of StdErr. It's on your own risk!

--non-zero-on-error None-zero exit code on any StdErr message.

--timestamp Show timestamp at the beginning of each message.It will be used only for text output format.

--profile Display timing and memory usage information.

--output-mode=OUTPUT-MODE Output format. Available options:

text - Default text output format, userfriendly and easy to read.

cron - Shortcut for crontab. It's basically focused on human-readable logs output.

It's combination of --timestamp --profile --stdout-only --no-progress -vv.

logstash - Logstash output format, for integration with ELK stack.

[default: "text"]

--cron Alias for --output-mode=cron. Deprecated!

-h, --help Display help for the given command. When no command is given display help for the list command

-q, --quiet Do not output any message

-V, --version Display this application version

--ansi|--no-ansi Force (or disable --no-ansi) ANSI output

-n, --no-interaction Do not ask any interactive question

-v|vv|vvv, --verbose Increase the verbosity of messages: 1 for normal output, 2 for more verbose output and 3 for debug

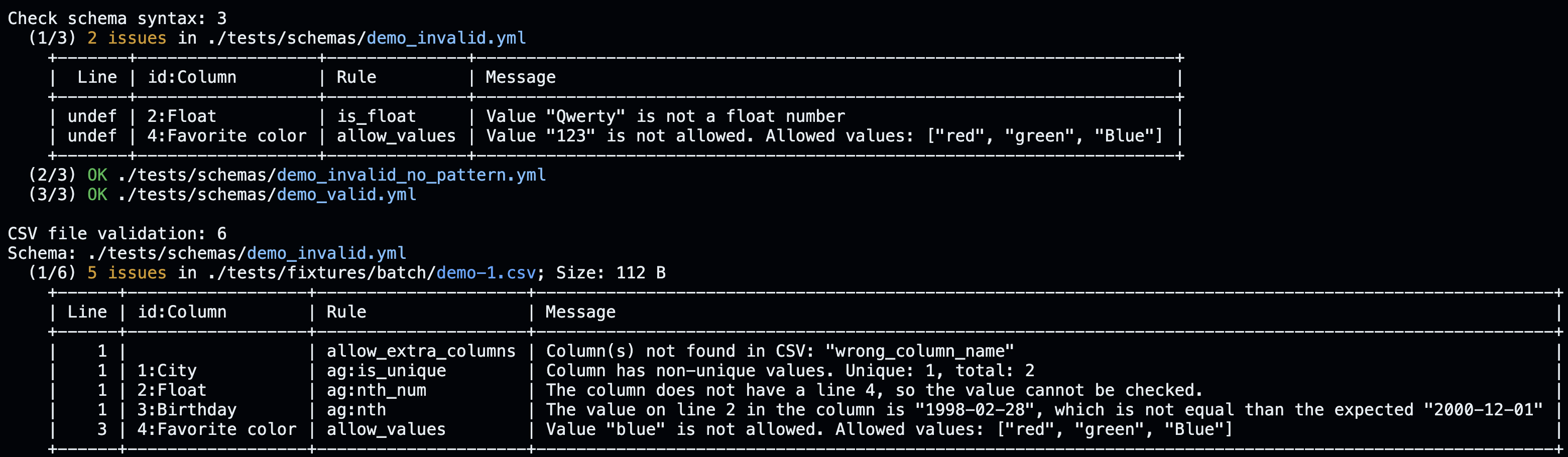

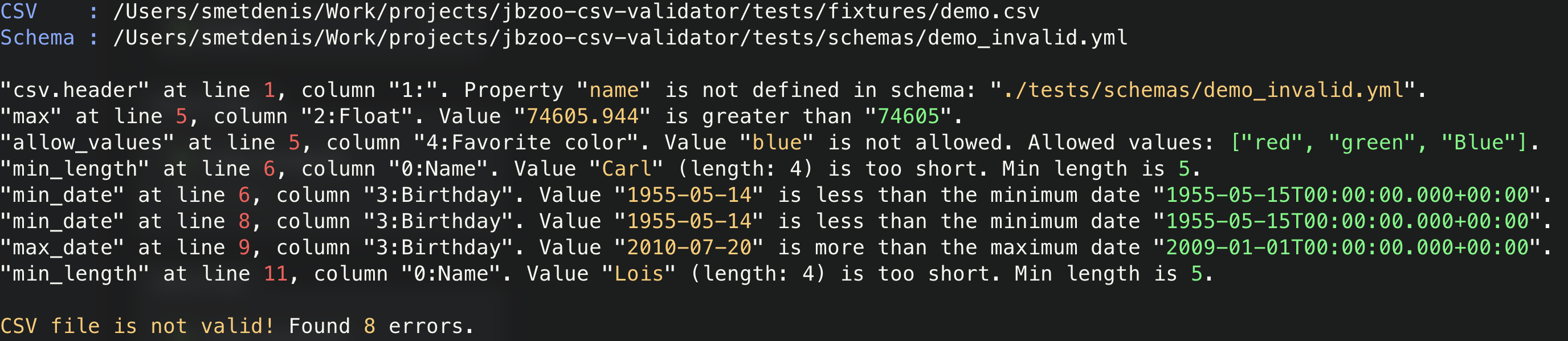

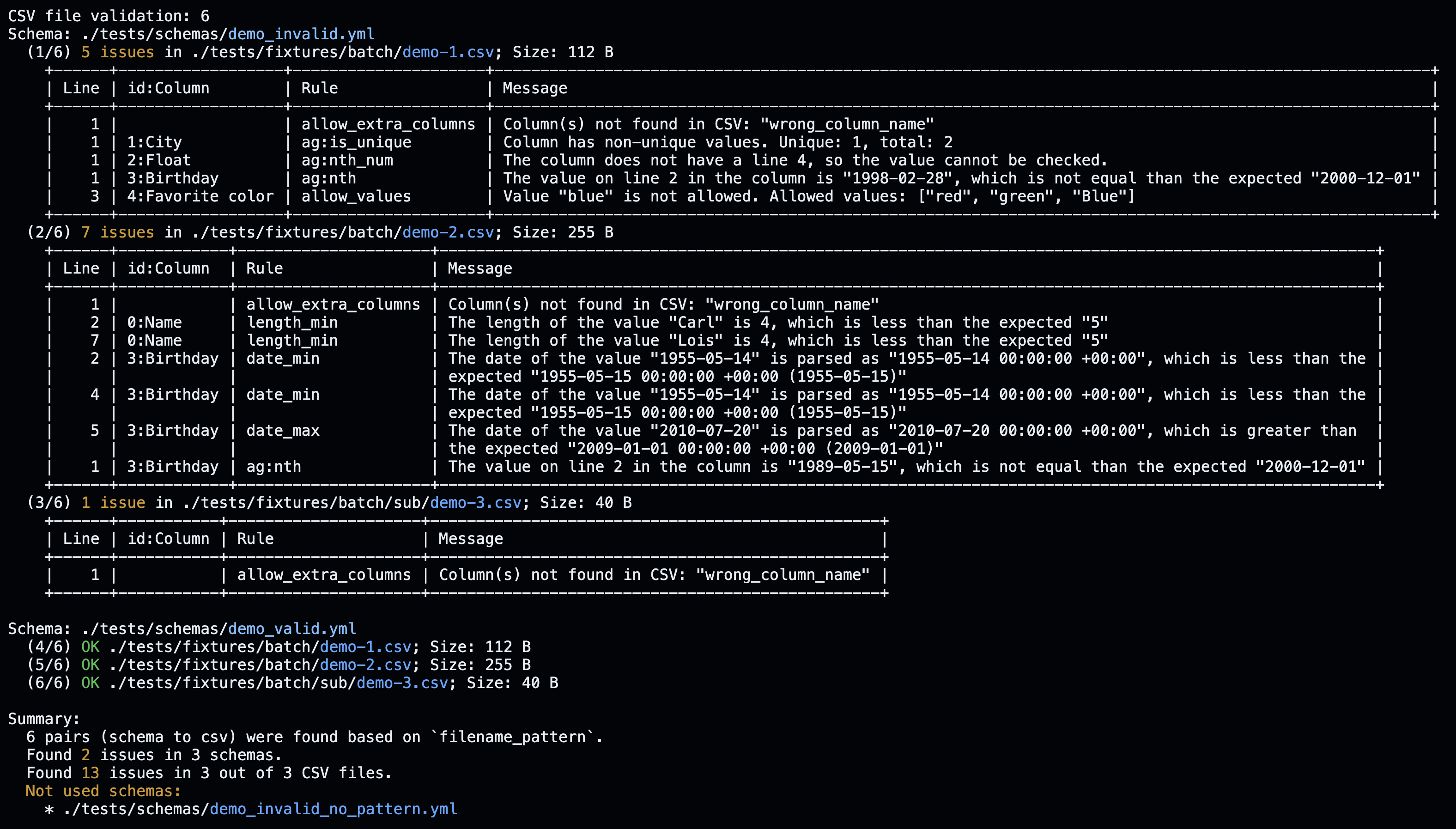

报告示例

验证过程最终生成一份易于阅读的报告,详细说明在CSV文件中发现的任何错误。虽然默认报告格式是表格,但该工具支持各种输出格式,包括文本、GitHub、GitLab、TeamCity、JUnit等,以最好地满足您的项目需求和您个人的或团队的首选。

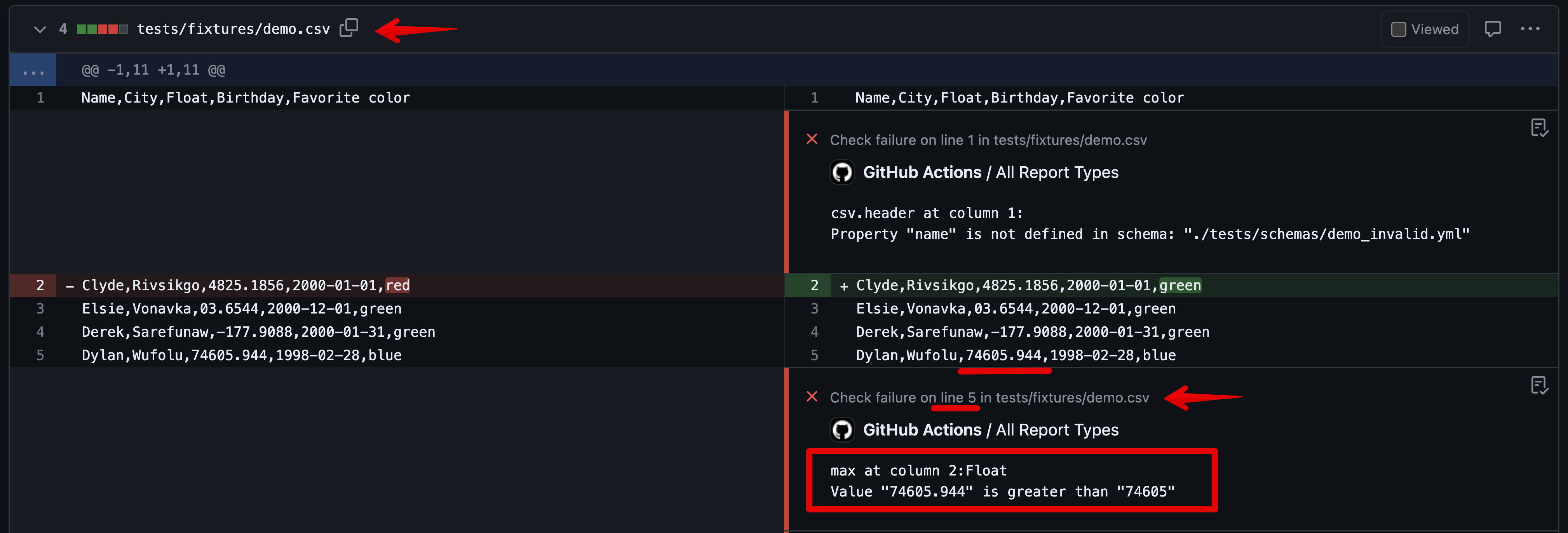

GitHub动作格式

要查看在拉取请求(PR)中的用户友好错误输出,请指定report: github。这利用了注释在PR级别直接在GitHub界面突出显示错误。此功能允许错误在CSV文件中的确切位置显示,就在您的拉取请求的diff中。要查看实际示例,请参阅此实时演示PR。

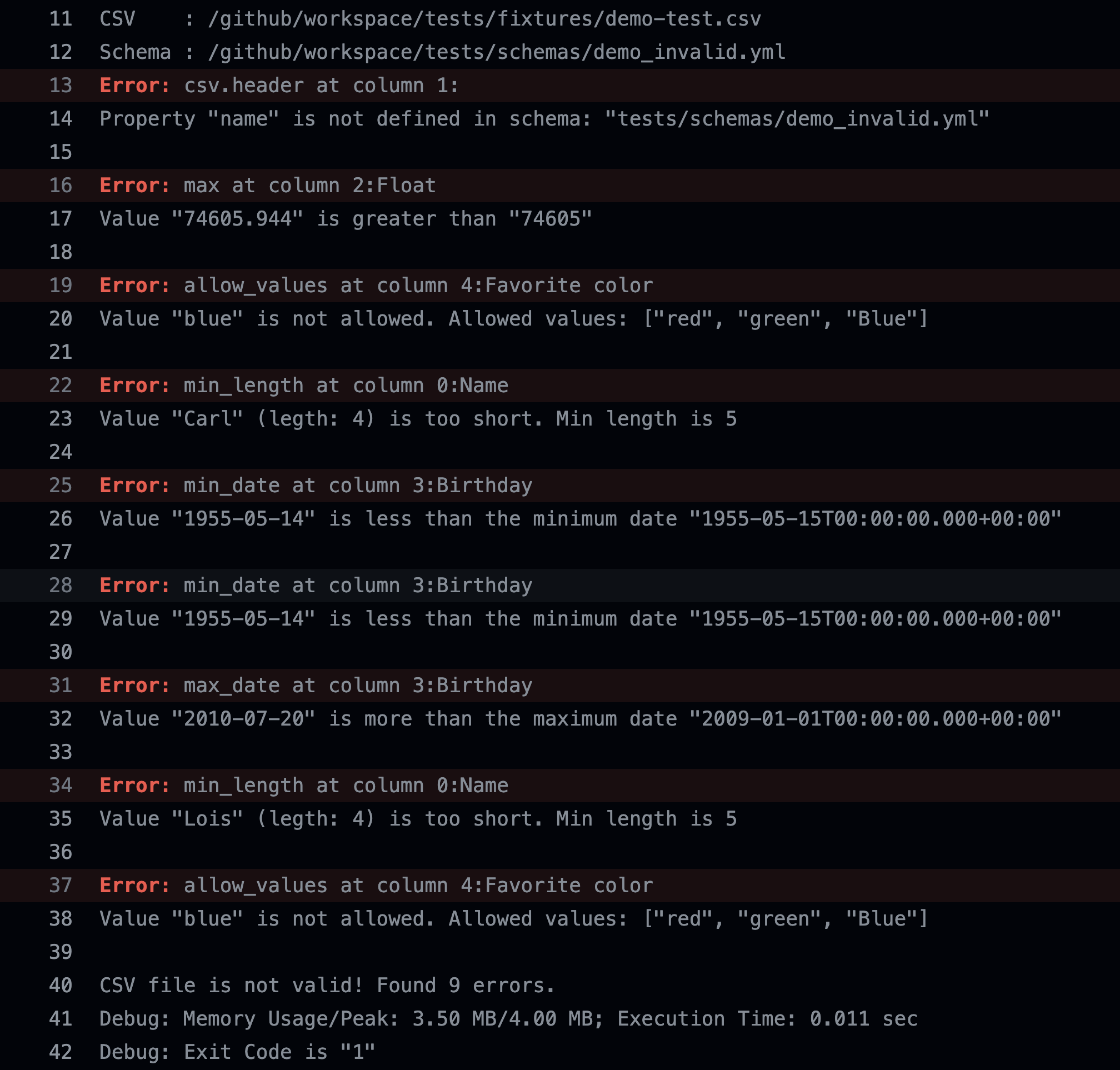

文本格式

可选格式text带有高亮关键字,以便快速导航。

表格格式

使用默认的table格式时,输出以清晰、易于理解的方式组织在表格中,列出所有发现的错误。此格式非常适合快速审查并与团队成员共享以进行进一步操作。

注意

- GitHub动作的默认报告格式是

table。 - 工具使用JBZoo/CI-Report-Converter作为SDK将报告转换为不同格式。因此,您可以轻松地将它与任何CI系统集成。

基准测试

了解此工具的性能至关重要,但需要注意的是,其效率受多个关键因素的影响

- 文件大小:CSV文件的尺寸,无论是行还是列,都会直接影响处理时间。性能与文件大小成线性关系,并取决于您的硬件能力,如CPU和SSD速度。

- 规则数量:每列的验证规则越多,处理迭代就越多。每个规则独立操作,因此总时间和内存消耗是所有规则的累积。

- 规则强度:尽管大多数验证规则针对速度和低内存使用进行了优化,但有些,如

interquartile_mean,可能会显著较慢。例如,interquartile_mean可能每秒处理约4,000行,而其他规则可以处理高达每秒5,000万行。

然而,为了获得对性能的一般了解,请参阅下表。

- 所有测试都是在包含

200万行以上以及一个额外标题行的数据集上进行的。 - 这些结果是从当前最新版本得出的,如使用GitHub Actions(查看workflow.yml)运行的测试所验证的。该链接提供了访问各种构建的链接,这对于不同的测试场景和实验至关重要。最代表性的数据可以在

Docker (latest, XX)下找到。 - 这些测试激活了开发者模式,使用标志

-vvv --debug --profile。 - 测试环境包括最新的Ubuntu + Docker。有关GitHub Actions(GA)硬件的更多信息,请查看GA硬件详情。

- 主要性能指标是处理速度,以每秒行数衡量。请注意,速度以每秒千行数表示(

100K等于100,000行每秒)。 - 每个测试用例持续时间内的峰值RAM使用情况作为额外的性能指标。

配置文件

- 最快:专注于最快的规则,无论是单元格还是聚合,提供基准。

- 最小值:使用一组常规性能规则,每种规则有两个实例。

- 现实情况:代表典型用例中可能遇到的规则混合。

- 所有聚合:同时测试所有聚合规则,说明最大负载。

分区

- 单元格规则:仅测试单个单元格验证规则。

- 聚合规则:专注于列级别的聚合规则。

- 单元格 + 聚合:结合单元格和聚合规则进行全面验证。

- 峰值内存:指示最大RAM使用量,在具有聚合规则的场景中尤其相关。

注意:《峰值内存》指标主要在聚合规则使用时感兴趣,因为非聚合场景通常不需要超过2-4兆内存,无论文件大小或规则数量。

这些基准测试提供了一个工具在各种场景下的能力快照,帮助您判断其是否适合您的特定CSV验证需求。

其他基准测试见解

当在不同的硬件配置上运行相同的验证测试时,工具的性能可能会有很大差异。值得注意的是,在 MacBook 14" M2 Max(2023)上测试的结果大约是GitHub Actions硬件上观察到的结果的两倍。这表明该工具在现代高性能设备上的卓越性能。

相反,在 MacBook Pro(2019)上进行的测试,其配备2.4 GHz的Intel处理器,与GitHub Actions的结果非常接近,表明提供的基准表反映了典型工程硬件的平均性能水平。

简短结论

-

单元格规则:这些规则对CPU非常密集,但需要最小的RAM,通常在峰值时为1-2 MB。应用到列中的单元格规则越多,验证过程所需时间越长,因为每个值都需要执行额外的操作。

-

聚合规则:这些规则运行速度极快,每秒可以处理从1000万到数十亿的行。然而,它们对RAM的需求很大。有趣的是,添加超过100个不同的聚合规则并不会显著增加内存消耗。

-

PHP数组函数:并非所有PHP数组函数都可以按引用操作(

&$var)。是否可以以这种方式操纵列中的数据集高度依赖于特定的算法。例如,一个20 MB的数据集可能在处理过程中被复制,导致峰值内存使用量为40 MB。因此,依赖于通过引用传递数据的优化技术通常无效。 -

实际影响:如果处理1 GB文件在30-60秒内是可接受的,并且有200-500 MB的RAM可用,那么可能没有必要过度关注这些性能考虑。

-

内存管理:在整个测试过程中,没有观察到内存泄漏。

CSV文件示例

用于基准测试的CSV文件描述如下。这些文件最初使用PHP Faker生成前2000行。随后,它们在自身内复制1000次,从而在几秒钟内创建出显著的大随机文件。

在这些文件中观察到的一个关键原则是,随着列数的增加,这些列中的值的长度也倾向于增加,遵循类似指数增长的模式。

列数:1,大小:8.48 MB

id

1

2

列数:5,大小:64 MB

id,bool_int,bool_str,number,float

1,0,false,289566,864360.14285714

2,1,true,366276,444761.71428571

列数:10,大小:220 MB

id,bool_int,bool_str,number,float,date,datetime,domain,email,ip4

1,1,true,779914,1101964.2857143,2011-02-04,"2000-03-02 00:33:57",erdman.net,germaine.brakus@yahoo.com,32.51.181.238

2,0,true,405408,695839.42857143,1971-01-29,"1988-08-12 21:25:27",bode.com,tatyana.cremin@yahoo.com,76.79.155.73

列数:20,大小:1.2 GB

id,bool_int,bool_str,number,float,date,datetime,domain,email,ip4,uuid,address,postcode,latitude,longitude,ip6,sentence_tiny,sentence_small,sentence_medium,sentence_huge

1,1,false,884798,1078489.5714286,2006-02-09,"2015-12-07 22:59:06",gerhold.com,alisa93@barrows.com,173.231.203.134,5a2b6f01-0bac-35b2-bef1-5be7bb3c2d78,"776 Moises Coves Apt. 531; Port Rylan, DC 80810",10794,-69.908375,136.780034,78cb:75d9:4dd:8248:f190:9f3c:b0e:9afc,"Ea iusto non.","Qui sapiente qui ut nihil sit.","Modi et voluptate blanditiis aliquid iure eveniet voluptas facilis ipsum omnis velit.","Minima in molestiae nam ullam voluptatem sapiente corporis sunt in ut aut alias exercitationem incidunt fugiat doloribus laudantium ducimus iusto nemo assumenda non ratione neque labore voluptatem."

2,0,false,267823,408705.14285714,1985-07-19,"1996-11-18 08:21:44",keebler.net,wwolff@connelly.com,73.197.210.145,29e076ab-a769-3a1f-abd4-2bc73ab17c99,"909 Sabryna Island Apt. 815; West Matteoside, CO 54360-7141",80948,7.908256,123.666864,bf3b:abab:3dcb:c335:b1a:b5d6:60e9:107e,"Aut dolor distinctio quasi.","Alias sit ut perferendis quod at dolores.","Molestiae est eos dolore deserunt atque temporibus.","Quisquam velit aut saepe temporibus officia labore quam numquam eveniet velit aliquid aut autem quis voluptatem in ut iste sunt omnis iure laudantium aspernatur tenetur nemo consequatur aliquid sint nostrum aut nostrum."

在本地运行基准测试

请确保您已安装 PHP 8.2+ 和 Docker。

# Clone the latest version git clone git@github.com:jbzoo/csv-blueprint.git csv-blueprint cd csv-blueprint # download dependencies and build the tool. make build # We need it to build benchmark tool. See `./tests/Benchmarks` folder. make build-phar-file # Optional. Only if you want to test it. make docker-build # Recommended. local tag is "jbzoo/csv-blueprint:local" # Create random CSV files with 5 columns (max: 20). BENCH_COLS=5 make bench-create-csv # Run the benchmark for the recent CSV file. BENCH_COLS=5 make bench-docker # Recommended BENCH_COLS=5 make bench-phar BENCH_COLS=5 make bench-php # It's a shortcut that combines CSV file creation and Docker run. # By default BENCH_COLS=10 make bench

缺点?

PHP 本质上慢的看法是一个常见的误解。然而,通过正确的优化策略,PHP 可以表现出色。证据请参考文章 在 PHP 中处理十亿行 CSV 数据!,它展示了 PHP 可以以大约 每秒 1500 万行 的速度处理、聚合和计算 CSV 文件中的数据!虽然并非所有优化都已实施,但性能已经相当令人印象深刻。

- 是的,承认这个工具可能不是最快的,但也不是最慢的。更多详情,请参阅上面的链接。

- 是的,该工具是用 PHP 编写的——不是 Python、Go 或 PySpark,这些可能不是这类任务的首选。

- 是的,它像是一个独立的二进制文件。建议只是使用它,而不要过分思考其内部工作。

- 是的,认识到这不能作为管道中的 Python SDK 使用。

然而,在大多数场景中,这些并不是决定性的问题。该实用工具有效地解决了在持续集成(CI)环境中验证 CSV 文件的问题。👍

这个实用工具设计为即用型,无需深入了解其内部机制。它遵循严格的测试标准,包括严格的类型检查,大约有七种 linters 和静态分析器在最高规则级别。此外,每个 pull request 都在 GitHub Actions 上接受大约十种不同的检查,覆盖了 PHP 版本和模式的矩阵,确保其健壮性。广泛的覆盖和预防措施是由于使用条件的不可预测性,体现了开源社区的精神。

总的来说,该工具是按照现代 PHP 的最高标准开发的,确保其按预期运行。

即将推出

这只是随意的想法和计划。没有承诺和截止日期。随时帮助我!。

点击查看路线图

-

批量处理

- 如果没有指定

--csv选项,则使用 STDIN。用于在类 Unix 系统中构建管道。

- 如果没有指定

-

验证

- 多

filename_pattern。支持正则表达式列表。 - 单单元格中的多值。

- 作为回调的自定义单元格规则。当您有一个复杂的规则,无法在模式文件中描述时很有用。

- 作为回调的自定义聚合规则。当您有一个复杂的规则,无法在模式文件中描述时很有用。

- 可配置的空/空值关键字。默认为空字符串。但您可以使用

null、nil、none、empty等。可以在列级别上覆盖。 - 处理空文件、只有标题行的文件或只有一行数据的文件。没有标题的一列也是可能的。

- 如果没有指定

--schema选项,则仅验证超级基础级别的内容(例如,“它是不是 CSV 文件?”)。 - 复杂规则(例如,“如果字段

A不为空,则字段B也应该不为空”)。 - 通过自定义规则和自定义报告格式进行扩展。插件?

- 输入编码检测 +

BOM(目前为实验性)。它工作,但不是很准确... 目前 UTF-8 是最佳选择。

- 多

-

性能和优化

- 使用 向量 而不是数组来优化内存使用和访问速度。

- 支持多线程,以便并行验证 CSV 的列。

-

模拟数据生成

- 根据模式创建 CSV 文件(例如,“根据模式和规则创建 1000 行随机数据”)。

- 使用 Faker 生成随机数据。

- ReverseRegex 从正则表达式生成文本。

-

报告

- 更多报告格式(如JSON、XML等)。有什么想法吗?

- Gitlab和JUnit报告必须采用相同结构。这并不容易实现。但这是个好主意。

- 将多个CSV文件合并成一个报告。当您有大量文件且希望在一个地方查看所有错误时很有用。特别是对于GitLab和JUnit报告。

-

杂项

- 用Go/Rust重写。拥有一个具有相同功能的独立二进制文件是个好主意。

- 在MacOS上通过brew安装。

- 在Ubuntu上通过apt安装。

- 用作PHP SDK。示例在Readme中。

- 关于已弃用选项和功能的警告。

- 添加选项

--recomendation以显示针对模式或CSV文件或模式中潜在问题的推荐规则列表。当您不确定要使用哪些规则时很有用。 - 添加选项

--error=[level]以仅显示具有特定级别的错误。当您有很多警告且只想看到错误时很有用。 - 更多示例和文档。

PS. 这里有一个文件包含了我的想法和想象力。这不是有效的模式文件,只是一个草案。我不确定我会实现其中的所有功能。但我会尽力而为。

贡献

如果您有任何想法或建议,请随时提出问题或创建pull请求。

# Fork the repo and build project git clone git@github.com:jbzoo/csv-blueprint.git ./jbzoo-csv-blueprint cd ./jbzoo-csv-blueprint make build # Make your local changes # Autofix code style make test-phpcsfixer-fix test-phpcs # Run all tests and check code style make test make codestyle # Create your pull request and check all tests in CI (Github Actions) # ??? # Profit!

许可协议

MIT许可证:它就像免费的披萨 - 享受它,分享它,只是不要将其作为自己的出售。记住,没有关于胃痛的保证! 😅

另请参阅

- Cli - 框架帮助创建复杂的CLI应用程序,并为Symfony/Console提供新工具。

- CI-Report-Converter - 它将不同错误报告标准转换为流行的CI系统。

- Composer-Diff - 查看

composer update后包的变化。 - Composer-Graph - 基于

composer.json的依赖关系图可视化,使用Mermaid JS。 - Mermaid-PHP - 使用

mermaid脚本语言生成图表和流程图。 - Utils - 收集有用的PHP函数、迷你类和代码片段,供日常使用。

- Image - 包提供以尽可能简单的方式操作图像的面向对象方法。

- Data - 扩展实现ArrayObject。使用Yml/PHP/JSON/INI文件作为配置。忘记数组。

- Retry - 提供重试/退避功能的微型PHP库,具有策略和抖动。

有趣的事实

我实现了一个个人里程碑。该项目的初始版本是在大约3天内从头开始制作的,期间穿插着照顾4个月大的婴儿的常规休息时间。回顾第一次提交和最早的git标签,很明显,这是在周末完成的,利用个人笔记本电脑的空闲时间。